Back in the late ’90s when domestic television began to switch to HD, it seemed to me that 1080p was overkill, particularly as the average screen size in those days was around 32″. Who needed all those extra pixels, for heaven’s sake, I harrumphed.

Higher resolution meant you needed much more data per frame. More memory for the frame buffer and a faster processor to move it all around. All quite unnecessary.
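To put rough numbers on that worry, here is a minimal sketch of the arithmetic. It assumes uncompressed 24-bit colour (3 bytes per pixel) and a 720×576 sampling of PAL. Both are illustrative assumptions rather than figures from this article, and real broadcast formats are heavily compressed.

```python
# Rough frame-buffer arithmetic. Assumes uncompressed 24-bit colour
# (3 bytes per pixel); real broadcast and storage formats compress heavily.

FORMATS = {
    "576 (PAL)": (720, 576),   # assumed 720x576 digital sampling of PAL
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def frame_bytes(width, height, bytes_per_pixel=3):
    """Bytes needed to hold one uncompressed frame."""
    return width * height * bytes_per_pixel


def relative_to(base, other):
    """How many times more data one frame of `other` needs than one of `base`."""
    return frame_bytes(*FORMATS[other]) / frame_bytes(*FORMATS[base])


if __name__ == "__main__":
    for name, (w, h) in FORMATS.items():
        print(f"{name:>10}: {frame_bytes(w, h) / 1e6:6.2f} MB per frame")
```

On these assumptions a 1080p frame needs 2.25 times the memory of a 720p frame, and a 4K frame needs four times as much again.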

I would concede that the old 576i PAL standard was improvable. But “720 is plenty”, I decreed. Let’s stop right there. There’s no need at all, either, to mess with the frame rate that’s stood us in good stead for the past hundred years.

And please don’t even mention 3D.

But here’s why I changed my mind…

THE FILM INDUSTRY STANDARD 24 frames a second (fps) does a good job of capturing motion without drawing attention to itself, but can get caught out when attempting to track fast movement. Instead of recording a well-defined image, individual frames trying to follow fast motion will register the moving subject with its details smudged, depending on the extent of travel during the twenty-fourth of a second exposure of each frame.
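The size of that smudge is simple arithmetic: speed multiplied by exposure time. The sketch below is illustrative only. The function name and the `shutter_fraction` parameter are hypothetical, and it assumes the subject moves at a constant speed measured in pixels per second. A `shutter_fraction` of 1.0 matches the full twenty-fourth of a second described above; film cameras often expose for only part of the frame interval, which a smaller fraction would model.

```python
def blur_pixels(speed_px_per_s, fps=24.0, shutter_fraction=1.0):
    """Distance (in pixels) a subject travels while one frame is exposed.

    shutter_fraction is the share of the frame interval during which the
    shutter is open: 1.0 means the whole 1/fps second, 0.5 means half of it.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if not 0.0 < shutter_fraction <= 1.0:
        raise ValueError("shutter_fraction must be in (0, 1]")
    return speed_px_per_s * shutter_fraction / fps


if __name__ == "__main__":
    # A subject crossing a 1920-pixel-wide frame in one second.
    speed = 1920.0
    print(blur_pixels(speed, fps=24))   # smear at 24fps, full-interval exposure
    print(blur_pixels(speed, fps=48))   # same subject at 48fps
```

Doubling the frame rate, or halving the exposure, halves the smear, which is why higher frame rates render fast motion more crisply.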

Played in sequence, these frames depict the moving object (or, during a fast pan, the entire scene) as unnaturally smeared. This representation of events is very different from the experience of an on-the-spot human observer. The human eye has the ability to observe fast motion in a series of short, almost instantaneous snapshots, taken between the rapid jumps of the eye called “saccades”, that effectively freeze each image. The brain then assembles these into the appearance of smooth motion, with all the detail, suppressing the blur of the jumps themselves in a process that has been called “saccadic masking”.

Is this a cinematic technical problem that needs to be solved? On the face of it, no—“motion blur” has been a feature of cinematography for more than a century. It’s something the cinemagoer has become inured to over the years—that racing car speeding across the screen with blurred edges is as much a part of cinema grammar as out-of-focus backgrounds and bumpy hand-held tracking shots. In fact, film editing software will often include the ability deliberately to add motion blur.

On the other hand, many display devices like TV sets and projectors attempt to avoid motion blur with a technology variously described as “PureMotion”, “frame interpolation” or “motion smoothing.” This mimics the eye’s saccadic behaviour by synthesising extra frames between the source frames, emulating a much higher frame rate.
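As a toy illustration of what “synthesising extra frames” means, the sketch below inserts in-between frames by cross-fading neighbouring source frames. This is a deliberate simplification and an assumption for illustration: it is not how any particular TV does it. Real motion smoothing estimates how objects move between frames, whereas a plain cross-fade like this would produce ghosting. Frames are represented here as nested lists of greyscale values, and the function names are hypothetical.

```python
def blend_frames(frame_a, frame_b, t):
    """Cross-fade two same-sized greyscale frames; t=0 gives a, t=1 gives b."""
    return [
        [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def interpolate_sequence(frames, factor=2):
    """Return a new sequence with factor-1 blended frames between each pair.

    factor=2 turns a 24fps sequence into (roughly) a 48fps one.
    """
    if factor < 1:
        raise ValueError("factor must be at least 1")
    out = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)                      # keep the original frame
        for k in range(1, factor):         # then add the synthetic ones
            out.append(blend_frames(a, b, k / factor))
    if frames:
        out.append(frames[-1])             # the last frame has no successor
    return out


if __name__ == "__main__":
    dark, bright = [[0.0, 0.0]], [[10.0, 20.0]]
    print(interpolate_sequence([dark, bright], factor=2))
```

With `factor=2`, every gap between source frames gains one synthetic frame, so the display shows nearly twice as many images per second as the film supplied.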

Why disrupt cinema grammar like this? The movie industry is not amused. Here’s what Tom Cruise and his director on Mission Impossible—Fallout, Chris McQuarrie, have to say about it:

“Video interpolation or motion smoothing is a digital effect on most high definition televisions, and is intended to reduce motion blur in sporting events and other high definition programmes. The unfortunate side effect is that it makes most movies look like they were shot on high speed video rather than film, and is sometimes referred to as ‘the soap opera effect’. Without a side by side comparison, many people can’t quite put their finger on why the movie they’re watching looks strange…”

In this digital age the distinction between high speed video and film is moot—there’s no obstacle to shooting movies at high frame rates. In fact, this has already been tried with feature films, with a result that the audience found “kitsch and alienating”, creating “a sickly sheen of fakeness”.

These were the judgements of film critics (in the Independent and the Telegraph respectively) on part one of Peter Jackson’s Hobbit trilogy, shot and, on this occasion, shown at 48fps.

It may be that these high frame rates present “too much information” (TMI). And as T. S. Eliot eloquently puts it in his poem “Burnt Norton”:

“…human kind

Cannot bear very much reality…”

Clearly, you can apply this TMI argument as much to resolution as to frame rate. It was for a long time the key to my “720 is plenty” argument against 4K.

However, since then I’ve had to make two reluctant concessions.

I See the Point (Sharply)

My second concession was a much slower burn.

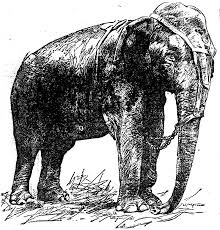

Several years ago, I came across a video clip of the electrocution of Topsy the Elephant. Belying her name, Topsy was reputedly a dangerous rogue, having killed at least one person, variously described as an innocent bystander or as one of her keepers who had amused himself by stubbing out his cigar on her trunk.

Whether Thomas Edison actually arranged the electrocution is moot, but in January of 1903 he certainly sent his film crew down to Coney Island to film the event. And the footage was later released to the public as evidence that AC electrical distribution (by now established as the winner against Edison’s own DC in what has come to be called the Current Wars) was—as Edison had always proclaimed—potentially lethal.

You can read Topsy’s sad story on Wikipedia. But why am I telling you all this here?

Audiences saw Edison’s film on a device called a Kinetoscope, queuing up one at a time to peer through a peephole at an illuminated moving image created by a travelling strip of perforated film. Their experience would have been rather clearer than the time-worn clip available today on YouTube. But we can imagine it pristine: monochrome and somewhat jerky as the cogs pulled the frames of the 50ft film strip at (I calculate) about 11fps.
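The working behind that figure isn't shown here, but one way to sanity-check it is sketched below. It assumes the strip was standard 35mm film with four perforations per frame, which gives 16 frames per foot. That film format, and any run time you feed in, are assumptions for illustration rather than details taken from this article.

```python
FRAMES_PER_FOOT_35MM = 16  # assumed: 35mm film, four perforations per frame


def total_frames(length_ft, frames_per_foot=FRAMES_PER_FOOT_35MM):
    """Number of frames on a strip of the given length."""
    return length_ft * frames_per_foot


def run_time_seconds(length_ft, fps, frames_per_foot=FRAMES_PER_FOOT_35MM):
    """How long the strip lasts when pulled through at `fps`."""
    return total_frames(length_ft, frames_per_foot) / fps


def implied_fps(length_ft, run_time_s, frames_per_foot=FRAMES_PER_FOOT_35MM):
    """Frame rate implied by a strip length and an observed run time."""
    return total_frames(length_ft, frames_per_foot) / run_time_s


if __name__ == "__main__":
    print(total_frames(50))                    # frames on a 50ft strip
    print(round(run_time_seconds(50, 11), 1))  # seconds of viewing at 11fps
```

Under that assumption a 50ft strip holds 800 frames, so 11fps would correspond to a little over 70 seconds of viewing.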

Their Verdict: Astonishingly realistic.

In other words, a splotchy monochrome image jerking through a device you had to stoop down to was “plenty” for that early twentieth century audience.

Modern scholarship dismisses the story of early cinema audiences panicking at a moving image of an oncoming train, probably correctly, as a myth. But it was a myth current at the time. This suggests that cinematography was then regarded as realistic enough for contemporary audiences to believe that such an image could create such panic.

I think I can grasp what’s going on here. As a twelve-year-old, in 1953 I was taken round to the house of a rich aunt to watch the coronation of Queen Elizabeth the Second on a black and white television set. The screen was small, probably about 14″. But the experience was—to use the current jargon—completely immersive. My memory of the event is that I was actually there at the Coronation.

The key to understanding this, I think, is that video images mimic reality and we impose our own recreation of reality upon them. It’s that mental recreation we experience, with the images on the screen merely being the prompts. To the extent that we are able to dream the movie rather than just watch it, our enjoyment and involvement are increased.

This distinction between dreaming and watching explains, I think, why the Hobbit movie looked odd and why Cruise and McQuarrie are so concerned you switch off frame interpolation. We watch our movies with our minds in “dream mode”, our preferred way of absorbing a story. Sports are something else. No story but a wealth of detail to be gobbled up and processed in real time. They’re as different as night and day.

When we move from 24fps to 48fps, from PAL to 720p, from 720p to 1080p and again from 1080p to 4K we perceive a change. Not the (highly successful) step change from mono audio to stereo—if you’re old enough to remember that. Nor the leap from 2D to 3D, a step change we seem to have stubbed our toes on.

If the images are prompts to the dreams assembled within our perception, they are prompts that are shouting at us louder with each new development. They draw attention to themselves, waking us from our dream of the story. We are now experiencing the technology, not the art it is there to convey.

This thinking was what informed my “720 is plenty” argument, although I hadn’t at the time articulated it quite like this. If I had—if I’d conceived it in terms of shouted prompts and dreams—I might have understood sooner how the phenomenon of “the soap opera effect”, the “kitsch and alienating…sickly sheen of fakeness” may only be a passing phase.

Because we become inured to noise. It’s the novelty that distracts us. And the novelty wears off.

Oh, But 3D

This is the place to mention 3D, another technology that fills the screen with extra information. It has failed twice in my lifetime: once as the red-green anaglyphics* of the 1950s and again in the digital resurgence of the 1990s. What went wrong with 3D? And are 4K and high-speed frame-rates doomed to the same fate?

We’ve evolved in a three-dimensional world. For millions of years, our eyes have been crucial to our survival, giving us perceptions of depth, distance and motion sufficient in most part to keep us away from tigers and alive, at least long enough to breed.

Reduced to its simplest, that invaluable mechanism of three-dimensional world awareness depends on two factors: the ability of the lenses of our eyes to focus and the rotational ability of our pair of eyes to converge on the point of interest. That muscular focussing and convergence information fed back to the brain simultaneously with the optical data gives us our measure of the space around us.

Crucial to this mechanism is the fact that our eyes focus and converge on the same point in space. Our calibration depends on this. It’s how our eyes work: focus and convergence geared together.

3D cinema breaks that gearing: our eyes must stay focused on the screen while converging on points that appear to lie in front of it or behind it. This is why around 10% of us start to get headaches at a 3D showing. And why children have been known to remove their glasses, preferring to enjoy the blur of superimposed images the raw screen presents. And it’s probably why 3D, in the ’50s and again in the ’90s, fell so seriously short of its expectations.

There’s something else awry with 3D as a story-telling technology. Murch doesn’t mention this, but neurologists have pointed out that 3D moving images trigger a quite different frame of mind in the audience, as compared with those viewing 2D movies.

Conventional films, however exciting the content may be, tend to lull our brains into something like a dream-state. As I’ve suggested above, the stories primarily unfold inside our own heads, just as they do in dreams. In this dream cinema we become unaware of the shortcomings of the technology—the fact that the pictures are being shown to us within a fixed frame, in short sections that chop around from view to view, and, for that matter, the way the perspective keeps jumping between closeup, mid-shot and long-shot.

Much of this is, as I’ve said, the learned grammar of film. But it’s been easy for us to learn because of this close relationship with dreams.

Movies shown in 3D present the brain with a quite different proposition. The 3D world we’re invited into doesn’t unfold in our heads. It’s out there. And as we watch objects and people shift around through the x, y and z axes, our brains—far from being lulled into a dream state—are stimulated into a heightened sense of awareness that triggers the autonomous trigonometry and calculus our brains evolved in ancient days.

How far away is that tiger? And moving how fast? How far up a tree can we get before she reaches us? And which is the nearest climbable tree?

The maths we’re unconsciously operating with when we watch 3D movies is even more complicated than that ancestral scenario, where the landscape and time were a continuous whole. As our 3D movie chops from shot to shot we have to recalculate the position of every object in view. That house partially visible on the left of screen is a different angle on the same house we’ve just been viewing in long shot. And so on.

If the object is in positive parallax we will still have heightened awareness of the edge, as compared with 2D, reminding us that we are looking at the scene as if it were enclosed in a box. This isn’t usually regarded as edge violation.

For sports, where the participants themselves need to be in this maths-heightened state of mind, there may well be much merit in our own brains having to calculate along with them. There may also be sequences in movies where the same remarks apply. A car chase, a fight, a tap-dance—any instance where the story stops to make way for dynamic showmanship. But if the intention is to wrap the audience in a story, 2D remains the governor.

Ever higher screen resolutions and faster frame rates (although subject, of course, to the law of diminishing returns) may distract us from our dreams for a while. But because they’re just “more of the same” and don’t require contortions to our mechanism of perception, in time—as I’ve now come to realise—we’re easily able to absorb them and get on with the story.

And with our accommodation to this new welter of information we may well begin to find that 720 is no longer plenty, in the same way that the “astonishing realism” of Topsy’s brief documentary now entirely escapes us.

Chris Bidmead: 17 Sep 2019