We concluded Part 4 with all four of the F4-223 NAS’s drive bays populated and a very respectable total of around 22TB of securely redundant storage. “Redundant” means that any one of these drives can fail and we should still be able to recover all our data.

We also mentioned in passing that although the F4-223 is more than adequate for its primary function as a network storage device, its hardware limitations unfortunately won’t allow us to explore the advanced features of the TOS 5 operating system. Some of the software Terramaster supplies at no additional cost is over-ambitious for this machine’s Celeron N4505 processor.

The F4-223 cleverly takes advantage of the advanced features of the N4505, like its encryption capability and its eight-lane PCIe bus. Low power consumption is a valuable feature for a basic NAS. But the processor’s limitations became clear, for example, when we tested the facial recognition built into Terramaster’s Terra Photo app. TOS 5 puts up a warning that the process takes a long time and consumes a lot of resources. In the case of our F4-223, that “long time” looks like being measured in months. We have left the AI facial recognition running ever since the publication of Part 4, and it has still produced no results from our test collection of around 50 face photos.

So Part 4 seemed to us about as far as we would be able to go. However, we were left with the feeling that we still hadn’t done this NAS full justice. Its pair of M.2 slots remained empty. And its pair of 2.5Gb/s Ethernet ports were still unexploited.

These two features go hand in hand. The M.2 slots theoretically add fast storage to the system and the Ethernet ports should be able to make the most of this by being bonded together for a total of 5Gb/s.

It’s easy enough to mention this in a review, but to explore these features hands-on (which, after all, is what Tested Technology is all about) would need a couple of enterprise-class NAS NVMe drives and a second 5Gb/s-capable device to put at the other end of a pair of bonded Ethernet cables.

Happily, our donors stepped up to help us on both fronts.

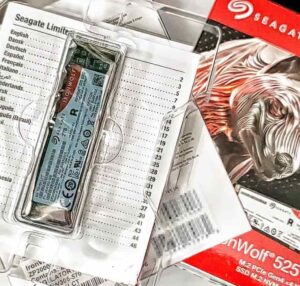

AT THE TIME OF WRITING, NVME DRIVES are getting cheaper by the minute. But high-endurance drives of the kind certified for use in a NAS are a different class from commodity drives and Terramaster only officially recommends a few brands.

A possible solution to the Ethernet side of things lay with our Framework laptop, generously donated by the US company to Tested Technology last year for our Landing on Linux series. The whole machine is modular, and the most immediate evidence of this is the arrangement of four slots in the chassis that accept a variety of plug-in expansion cards. Our laptop came with two USB Type C cards, a USB Type A card and a choice of HDMI or DisplayPort for the fourth slot.

No Ethernet, as the internal (but, of course, modular) Wi-Fi card supplied with Framework’s “DIY version” connects more than adequately for general purposes through Wi-Fi 6E. But the company does sell Ethernet expansion cards. And, yes, these can handle 2.5Gb/s data transfer. So we could swap the HDMI card (mostly unused) and one of the USB Type C cards for a pair of Ethernet cards.

Framework has very recently revamped its shipping costs to Europe, thankfully dropping the initial price of around £25 per item to a much more reasonable £10 to cover multiple items. The Ethernet expansion cards sell for around £35 each, so the total cost would be of the order of £80. But a couple of enterprise-class 2TB NVMes would be around £700.

As an ad-free Web publication, unaffiliated with any manufacturer, Tested Technology relies on no-strings donations from manufacturers of our test hardware. The F4-223 was one such donation, as were the two 22TB Seagate drives. Generous donations like these have managed to keep us running on empty for our nearly 10-year existence. And on this occasion, Seagate came through for us once more, donating a pair of NAS-certified IronWolf 525 2TB NVMe drives. And Framework pitched in with the donation of the two 2.5Gb/s Ethernet expansion cards.

Installing the New Internal NVMe Drives

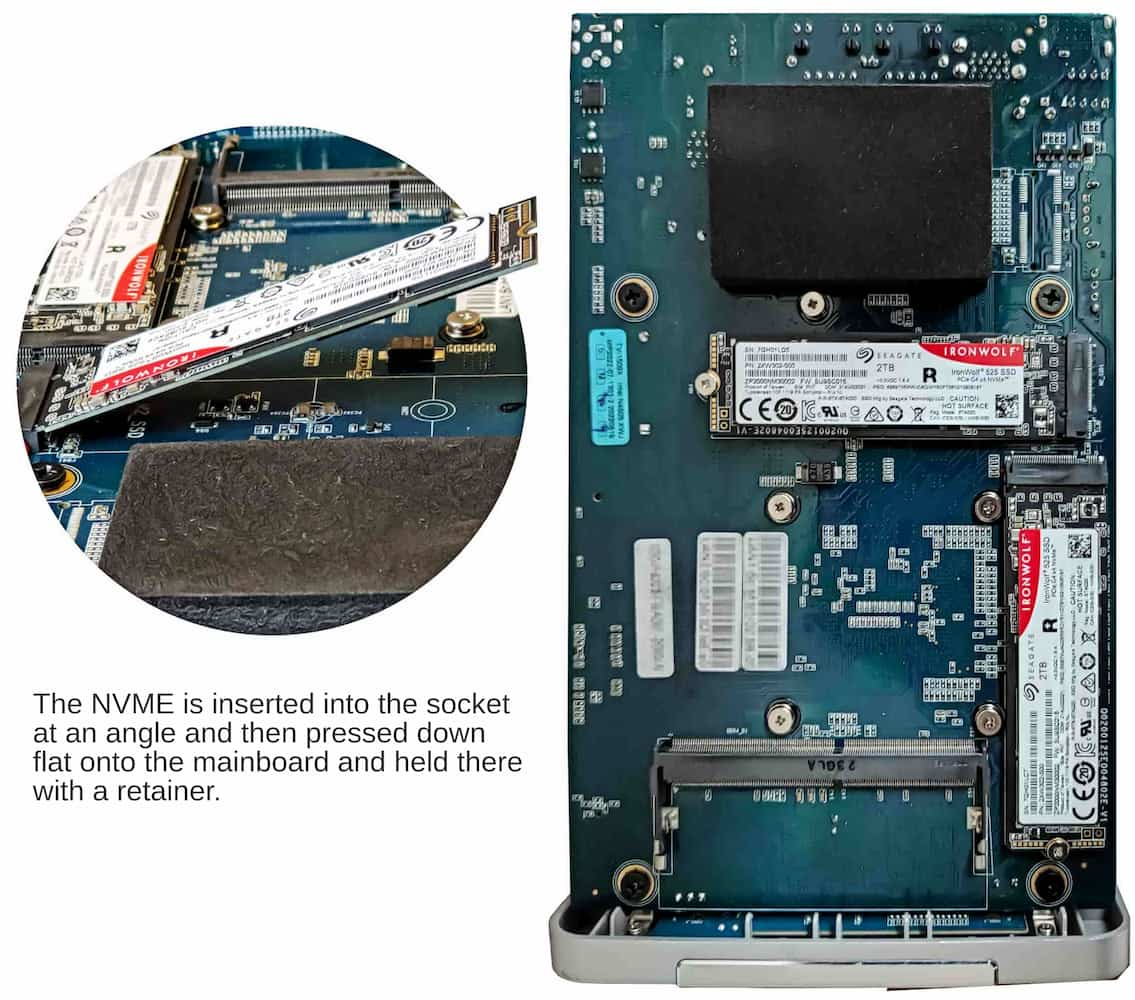

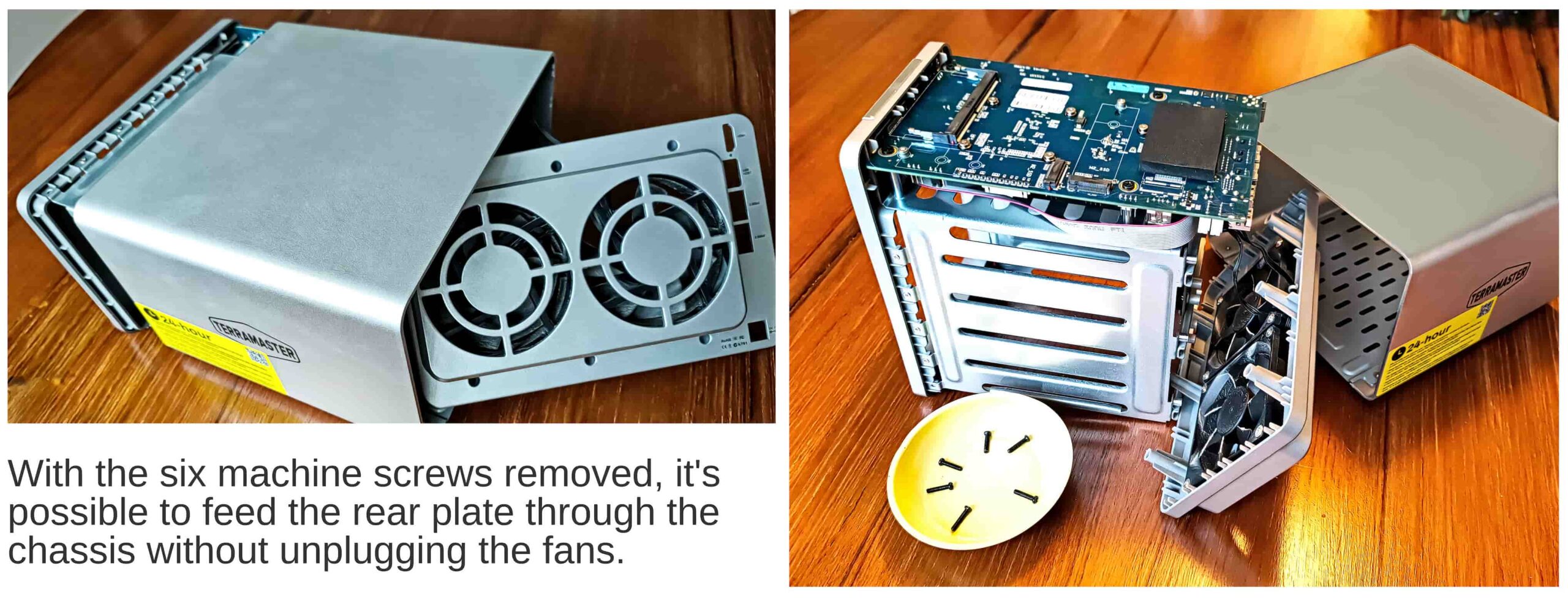

Fitting the tiny slivers of hardware to the F4-223 is more complicated than sliding hard disks into the drive bays. You need to remove the entire chassis from its case in order to get to the motherboard. The chassis is held in with six machine screws that run through the rear panel housing the two fans. These screws can be removed with the same Phillips-head screwdriver that Terramaster used to supply with its earlier NASes, before it started using tool-less drive carriers.

Two pairs of thin ribbon cables run from the chassis to the rear panel holding the two fans. After some careful experimentation we managed to slide the chassis and the rear panel out through the case without disconnecting the cables. Turning the chassis on its side, we now had access to the motherboard and its two M.2 slots.

Storage or Cache?

TOS 5 allows solid-state drives added like this to function either as cache or as primary storage. Caching means that no additional storage space is added; instead, the NVMes act as intermediaries between the existing hard drive storage pools and the outside world, a way station designed to smooth and speed the traffic.
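The “way station” idea can be pictured with a toy read cache. This is a minimal bash sketch under our own assumptions, not how TOS 5 actually implements its SSD cache: the associative array ssd_cache stands in for the NVMes, the hypothetical function read_block stands in for a request for a block of data, and there is no size limit or eviction, both of which a real cache would need.

```bash
#!/usr/bin/env bash
# Toy read cache (illustrative only, not TOS 5's implementation).
# ssd_cache plays the NVMe "way station"; a miss stands in for a slow
# trip to the hard drives. No capacity limit or eviction is modelled.
declare -A ssd_cache=()
hits=0
misses=0

read_block() {
  local id=$1
  if [[ -n ${ssd_cache[$id]+set} ]]; then
    hits=$((hits + 1))              # served from the fast tier
  else
    misses=$((misses + 1))          # fetched from the hard drives...
    ssd_cache[$id]="data-for-$id"   # ...and kept on the SSD for next time
  fi
  REPLY=${ssd_cache[$id]}           # result returned via REPLY (no subshell)
}

read_block 7; read_block 7; read_block 9
echo "hits=$hits misses=$misses"
```

The second request for block 7 never goes near the hard drives, which is the whole point of the cache.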

But before investigating this, we wanted to test the effectiveness of the new addition as primary storage. And this meant setting up the two NVMes as a second storage pool.

Synchronising the two NVMes to create Storage Pool #2 took approximately 5 hours, very much longer than we had expected. The drives have a rated speed approximately 5 to 6 times that of the standard SATA 3 hard drives in the rest of the machine. According to Seagate’s specs, the synchronisation (essentially formatting the two drives into a zeroed-out mirrored pair) should have been over in minutes rather than hours.
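The gap between expectation and reality can be put into rough numbers. This bash sketch assumes decimal units (1TB = 1,000,000MB) and that synchronisation writes each 2TB drive end to end once, with the two drives written in parallel. The 1,000MB/s rate is purely illustrative, not a figure from Seagate’s spec sheet.

```bash
#!/usr/bin/env bash
# Back-of-envelope synchronisation arithmetic (decimal units: 1TB = 1,000,000MB).
# Assumes each 2TB drive is written end to end once, both drives in parallel.

# Effective rate (MB/s, rounded down) implied by a given capacity and duration.
implied_rate() {
  local capacity_tb=$1 hours=$2
  echo $(( capacity_tb * 1000000 / (hours * 3600) ))
}

# Minutes needed (rounded down) to write a given capacity at a given rate.
minutes_needed() {
  local capacity_tb=$1 rate_mb_s=$2
  echo $(( capacity_tb * 1000000 / rate_mb_s / 60 ))
}

implied_rate 2 5        # prints 111: what a 5-hour sync works out at
minutes_needed 2 1000   # prints 33: at an illustrative 1,000MB/s
```

On these assumptions, our 5-hour synchronisation corresponds to only about 111MB/s per drive.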

We ran some internal speed tests to throw some light on the puzzle.

Flexible Ins and Outs

Linux, on which TOS 5, like many other NAS operating systems, is based, was an extraordinary mind magnet for some of the best computing brains of the 20th century. Its originator, Linus Torvalds, began at the start of the ’90s with a modest terminal emulation program, which grew into an operating system kernel. Subsequently, borrowing hugely from Richard Stallman’s then nearly 8-year-old GNU project of Unix-like command line utilities, it became a rounded, complete (and completely free) operating system.

By the 21st century the mind magnet of Linux was metaphorically measuring thousands of gauss (the earth’s magnetic field is about 0.5 gauss). One of the very many geniuses it pulled in was Jens Axboe. Already renowned in the Linux community for his work on the kernel, Jens became more widely known for his development of a magnificent command line utility, fio.

Windows users can find a version for their operating system here. If you’re a Mac user, you’ll need to use the free Homebrew open-source software package management system.

The name fio stands for “flexible i/o tester” and adheres to the Unix tradition of terseness. The utility itself, however, doesn’t. Although putty in the hands of an experienced programmer, for the average computer user it’s a wild jungle of possibilities, with parameter options like caterpillar legs.

But it was the ideal approach to testing the raw speed of our new NVMe storage within the F4-223. And thanks to Terramaster, when we sshed into TOS 5 as administrator, fio was there waiting for us.

After several days of experimentation and extended consultation with Web resources, we came up with this:

    fio --name=writetest --ioengine=libaio --rw=write --bs=128k --numjobs=32 \
      --size=10G --runtime=60 --time_based --end_fsync=1 --directory=/Volume1/public/HDFolder

(The backslash at the end of the first line escapes the newline, so the shell reads the two lines as one long command.)

This command runs 32 parallel jobs, each writing to its own 10GB test file in the ../HDFolder directory for 60 seconds, and reports the rate at which the data was written. We ran the same test on our ../Speedy directory on the NVMe storage.

Having done that, we edited the command line to test the read speed for each of the two storage media.
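Editing the command by hand for each of the four combinations invites typos. One way to keep the runs consistent is to generate all four from a single template, changing only --rw and --directory. In this sketch the function name fio_cmd is ours, the NVMe path NVME_DIR is a hypothetical placeholder, and --group_reporting is our own addition: a standard fio option that combines the 32 jobs into a single summary instead of reporting each separately.

```bash
#!/usr/bin/env bash
# Print the four fio runs (write and read, on HD and NVMe) from one template,
# so only --rw and --directory change between runs. Pipe the output to bash to execute.
HD_DIR=/Volume1/public/HDFolder
NVME_DIR=${NVME_DIR:-/path/to/Speedy}   # hypothetical: substitute your NVMe pool's path

fio_cmd() {
  local mode=$1 dir=$2   # mode: "write" or "read"
  printf 'fio --name=%stest --ioengine=libaio --rw=%s --bs=128k --numjobs=32 --size=10G --runtime=60 --time_based --end_fsync=1 --group_reporting --directory=%s\n' \
    "$mode" "$mode" "$dir"
}

for dir in "$HD_DIR" "$NVME_DIR"; do
  for mode in write read; do
    fio_cmd "$mode" "$dir"
  done
done
```

Printing rather than running the commands makes it easy to check them before committing to four minutes of sustained disk activity.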

| | NVMe (MB/s) | HD (MB/s) | % Delta |
|---|---|---|---|
| Read Speed | 15.5 | 1.208 | 1183.11% |
| Write Speed | 244 | 19.0 | 1184.21% |

In our tests, writing turned out to be a faster operation than reading, but in both cases NVMe beats HD hands down.
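The % Delta column is the NVMe figure’s percentage advantage over the hard drive figure, (NVMe - HD) / HD × 100. A minimal bash sketch that reproduces both rows, with awk doing the sums because bash has no floating-point arithmetic:

```bash
#!/usr/bin/env bash
# % Delta = (NVMe - HD) / HD * 100, i.e. how far the NVMe figure exceeds the HD one.
# Bash has no floating-point arithmetic, so awk does the sums.
pct_delta() {
  awk -v nvme="$1" -v hd="$2" 'BEGIN { printf "%.2f\n", (nvme - hd) / hd * 100 }'
}

pct_delta 15.5 1.208   # read row:  prints 1183.11
pct_delta 244 19.0     # write row: prints 1184.21
```

A negative result would mean the hard drive came out ahead, as happens in two of the read rows of the 1Gb/s table later on.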

Ethernet for the Framework Laptop

But in any case these internal read and write speeds are largely academic. This is network attached storage and we need to take network access speeds into account.

Our office Ethernet LAN here at Tested Technology runs at 1Gb/s. But if we bypassed the LAN, a bonded pair of Framework Ethernet expansion cards wired directly into the F4-223 could give us up to 5Gb/s.

These Framework 2.5Gb/s Ethernet cards connect into the laptop with a standard USB Type C plug, which means they will also work with many other devices.

Would this be fast enough to be transparent, so that the network link isn’t the bottleneck? No, not on paper. Not nearly. But we’ve seen that the lane restriction means that the NVMes in the F4-223 run well below their manufacturer’s specs.

In fact, this seems to be common practice when NVMe storage is paired with low-power processors. PCIe is designed to allow it, as a device simply negotiates down to however many lanes the host provides, but more clarity from manufacturers like Terramaster would certainly be helpful.

The Name’s Bond, LACP Bond

There are various ways of uniting a pair of Ethernet ports. Some are proprietary, but the most generally accessible is the cross-platform, open-standard Link Aggregation Control Protocol, or LACP (defined by the IEEE, originally as 802.3ad). When Framework offered to donate the pair of 2.5Gb/s Ethernet expansion cards, we knew enough about LACP to know that it would in some way double the bandwidth of the Ethernet connection. But we didn’t, as it turned out, know enough.

We’ll get to the speed tests in a moment. But here, up front, is the snag we encountered.

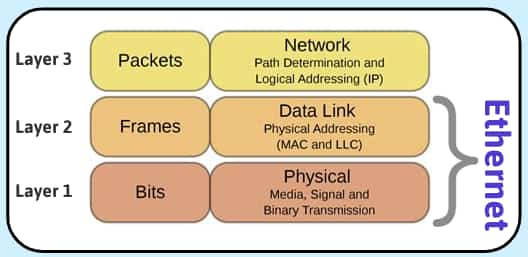

It’s easy to think of Ethernet as a continuous stream, like running water. But it’s actually a series of addressed packets of information, a string of separate envelopes. When LACP bonds a couple of Ethernet connections it doesn’t magically increase the speed of the individual connections. Neither does it, as we’d vaguely imagined, split the individual packets of information in two and send one half down one pipe while simultaneously sending the other half down the other pipe. No: an Ethernet receiver that gets a broken packet simply throws it away, and a higher-level protocol such as TCP then has to arrange a resend.

Resilient. But not speedy.

It’s perfectly feasible, however, that a bonded link might do much the same thing without splitting packets. One packet in the stream goes down one channel, the following packet goes down the other channel. Even if they arrive out of order, as very commonly happens across the Internet, the TCP/IP protocols that travel over Ethernet are specifically designed to cope with that. Packets received over wide connections like the Internet are accumulated in the receiving system’s “resequencing buffer”, where they’re sorted back into their original order.
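To make the resequencing buffer concrete, here is a toy model in bash. It is only an illustration of the idea, not how any real TCP stack is written: each argument is a hypothetical “sequence:payload” packet, and packets are held back until the next expected sequence number turns up, at which point everything now in order is released.

```bash
#!/usr/bin/env bash
# Toy resequencing buffer. Each argument is a hypothetical "sequence:payload"
# packet; packets are held until the next expected sequence number turns up,
# then everything that is now in order is released.
resequence() {
  local -A held=()
  local next=0 pkt seq out=""
  for pkt in "$@"; do
    seq=${pkt%%:*}
    held[$seq]=${pkt#*:}
    # Release every packet that is now contiguous with what's been delivered.
    while [[ -n ${held[$next]+set} ]]; do
      out+=${held[$next]}
      unset "held[$next]"
      next=$((next + 1))
    done
  done
  printf '%s\n' "$out"
}

resequence 1:B 0:A 3:D 2:C   # prints ABCD
```

Packet B waits until A arrives, and D waits until C arrives; that holding and sorting is exactly the latency LACP sets out to avoid.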

But LACP doesn’t use resequencing buffers. Why? As you can imagine, pouring the received packets into a pool for re-sorting before they get passed on is time-consuming (it introduces latency, as the techies call it). LACP aims to keep things moving quickly by frog-marching all the packets of a given stream, in their strict order, down a single pipe.

Of course, that restricts them to the speed of the pipe, 2.5Gb/s in our case. But you can attain your overall 5Gb/s by simultaneously sending a second stream down the other pipe.

Yes, bottom line, the bonded 5Gb/s only kicks in when you’re dealing with more than one stream of Ethernet data.
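The bottom line can be reduced to a rule of thumb. A minimal bash sketch, assuming each stream is pinned to one link and that the hash happens to spread the streams evenly across the links (real hashes give no such guarantee):

```bash
#!/usr/bin/env bash
# Best-case aggregate throughput of a bond. Each stream is pinned to one link,
# so the total is capped by whichever is smaller: the number of streams or of links.
# Assumes the hash spreads streams perfectly evenly, which is not guaranteed.
best_case_mbps() {
  local link_mbps=$1 links=$2 streams=$3
  local used=$(( streams < links ? streams : links ))
  echo $(( used * link_mbps ))
}

best_case_mbps 2500 2 1   # prints 2500: one stream, one 2.5Gb/s pipe
best_case_mbps 2500 2 2   # prints 5000: two streams can fill both pipes
best_case_mbps 2500 2 8   # prints 5000: extra streams can't exceed two links
```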

Meaningful Metrics

The fio utility gives us a valuable insight into the internal capabilities of the hardware. But now that we know how to bond the two 2.5Gb/s Ethernet ports, we’re looking for a speed test that meaningfully reflects the value of adding NVMe storage to the NAS.

Using our bonded Ethernet link, and assuming we can meaningfully max out both streams simultaneously, the best possible speed for shifting data on and off the NAS will be 5Gb/s, or just 0.625GB/s. So, clearly, we have a bottleneck here, even with the NVMes throttled down to a single lane. What’s the best software for assessing the real speed of getting data on and off the F4-223?
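Putting both sides into the same unit makes the point concrete. This bash sketch rests on one assumption the article doesn’t state: that the F4-223’s M.2 slots run at PCIe 3.0, which signals at 8GT/s per lane with 128b/130b encoding. Protocol overheads on both sides are ignored.

```bash
#!/usr/bin/env bash
# Put network and PCIe rates into the same unit, MB/s (decimal).
# Assumption: the M.2 slots run at PCIe 3.0, i.e. 8GT/s per lane with
# 128b/130b encoding. Protocol overheads on both sides are ignored.
gbps_to_mb_s() {
  awk -v g="$1" 'BEGIN { printf "%.0f\n", g * 1000 / 8 }'
}
pcie3_lanes_to_mb_s() {
  awk -v n="$1" 'BEGIN { printf "%.0f\n", n * 8000 * 128 / 130 / 8 }'
}

gbps_to_mb_s 5           # prints 625: the bonded Ethernet ceiling
pcie3_lanes_to_mb_s 1    # prints 985: a single PCIe 3.0 lane
```

On that assumption, even one lane, at roughly 985MB/s, has headroom over the 625MB/s ceiling of the bonded link.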

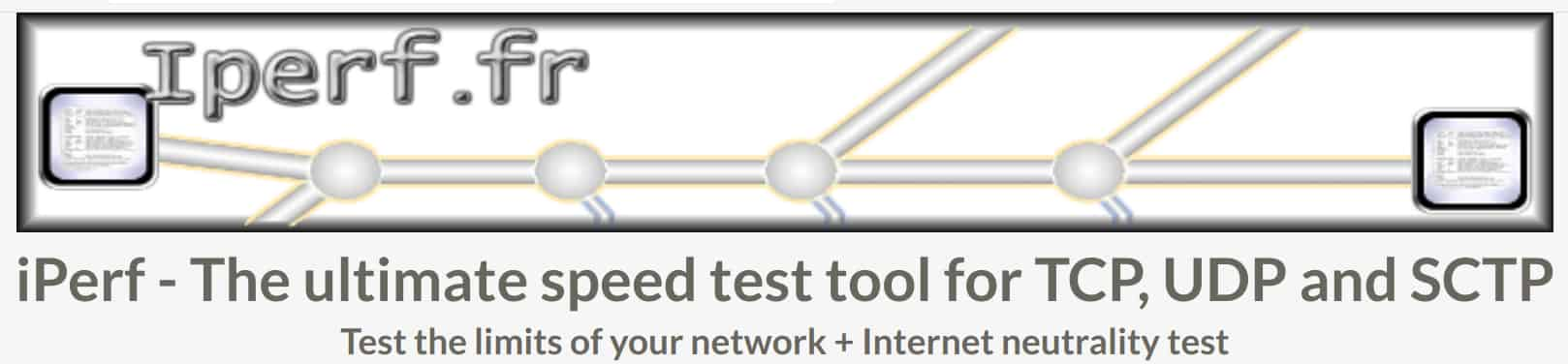

Happily, wise minds are ahead of us here. iperf3, a cross-platform command line application, is descended from iperf, which emerged from work done at the University of Illinois towards the beginning of this century. It’s been refined over the years to cover most of the basic issues relating to Ethernet data transfer. Initially, iperf only tested the Ethernet transfer rate, conjuring data out of the blue, shooting it across the Ethernet connection and then just letting it evaporate at the receiving end. But the arrival of iperf3 in 2014 introduced the -F parameter, allowing the utility optionally to pull data out of storage and, if required, write it out onto storage at the receiving end.

iperf3’s big advantage over the graphical user interface speed tests generally used is that it gives you ways to test the transmission rate independently of the file transfer rate. This can tell you up front whether your connection to the storage device is going to be a bottleneck, which is what we expected might be the case with our setup.

The app has a downside: you need to be running it at each end of the Ethernet link. Installing iperf3 on our Ubuntu-based Framework laptop would be easy enough, as binary versions for Linux, Mac and Windows can be downloaded from the French website. But there was no sign there of a version for our TOS 5 NAS.

As the F4-223 is Intel-based hardware running an operating system built on Linux, we set out to see whether we could install the app there. There was no need: Terramaster was there ahead of us. iperf3 is already one of the provided system tools.

iperf3 In Action

Getting the two streams to go simultaneously down two different LACP links needs some careful preparation. We had to make sure the bonded link at the transmitting end sent its streams from two different IP addresses and that the bond would be able to use these different addresses to decide which of the two bonded links to send its stream down.

It turns out that you can load up an individual Ethernet link with as many different IP addresses as you like. And that also goes for a bonded link. So with an appropriate iperf3 command line you can send two simultaneous streams from two different addresses over the same bonded link.
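On a Linux client such as our Ubuntu laptop, one way to build such a bond and give it two addresses is with iproute2’s ip command. This is an illustrative sketch, not the configuration we actually used: the interface names eth_a and eth_b and the bond name bond0 are hypothetical, and by default it only prints the commands (set DRY_RUN=0 and run as root to apply them). Settings made this way don’t survive a reboot, and the NAS end has to be set up as an LACP bond too.

```bash
#!/usr/bin/env bash
# Sketch: an 802.3ad (LACP) bond carrying two IPv4 addresses, via iproute2.
# Hypothetical names: eth_a, eth_b (the two Ethernet cards) and bond0.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them (root needed).
# Settings made this way do not survive a reboot.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [[ $DRY_RUN == 1 ]]; then echo "$*"; else "$@"; fi
}

setup_bond() {
  run ip link add bond0 type bond mode 802.3ad xmit_hash_policy layer2+3
  local nic
  for nic in eth_a eth_b; do
    run ip link set "$nic" down            # take the port down before adding it to the bond
    run ip link set "$nic" master bond0
  done
  run ip link set bond0 up
  run ip addr add 192.168.0.190/24 dev bond0   # first sending address
  run ip addr add 192.168.0.191/24 dev bond0   # second sending address
}

setup_bond
```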

But unless the bond is told to use these different addresses to route the streams, they’ll end up being piped down a single 2.5Gb/s link. The bonding driver computes a hash from each packet’s addresses to decide its route, and the default hash policy (described simply as “layer2”) looks only at the hardware (MAC) addresses, ignoring the sending IP addresses. So you need to ensure that the xmit_hash_policy is “layer2+3”, which takes the IP addresses into account as well.
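A much-simplified model shows why the policy matters. It is not the kernel’s actual hash, which mixes in more of the address bits and the protocol type; here, as an assumption, only the last byte of each MAC and IPv4 address is XORed together and the result taken modulo the number of links. The MAC addresses are hypothetical; the IP addresses are those used in our iperf3 script.

```bash
#!/usr/bin/env bash
# Much-simplified model of bonding's transmit hash (NOT the kernel's code).
# Assumption: only the last byte of each MAC and IPv4 address is used.
pick_link() {
  local policy=$1 src_mac=$2 dst_mac=$3 src_ip=$4 dst_ip=$5 links=$6
  local h=$(( 0x${src_mac##*:} ^ 0x${dst_mac##*:} ))
  if [[ $policy == "layer2+3" ]]; then
    # 10# forces decimal, so an octet such as 08 isn't misread as octal.
    h=$(( h ^ 10#${src_ip##*.} ^ 10#${dst_ip##*.} ))
  fi
  echo $(( h % links ))
}

LAPTOP_MAC=aa:bb:cc:dd:ee:01   # hypothetical
NAS_MAC=aa:bb:cc:dd:ee:02      # hypothetical
for policy in layer2 layer2+3; do
  for src in 192.168.0.190 192.168.0.191; do
    echo "$policy $src -> link $(pick_link "$policy" "$LAPTOP_MAC" "$NAS_MAC" "$src" 192.168.0.207 2)"
  done
done
```

Under layer2 both streams share the same pair of MAC addresses, so they always land on the same link. Adding the IP addresses under layer2+3 separates them in this model, because .190 and .191 differ in their lowest bit.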

At the NAS end you can start iperf3 as a server:

    iperf3 -s

This sets it running, listening for input on the default port of 5201 (changeable with a command line parameter). But in our case the NAS is going to be accepting two streams simultaneously, which means we’ll need to be running two different iperf3 servers on two different ports. So:

    iperf3 -s & iperf3 -s -p 5202

At the client end, on the Framework laptop, we send the two separate streams from each of the bond’s two separate IP addresses. We could enter this at the client prompt as a long command line, but it makes more sense to create a bash script:

    #!/bin/bash
    # Send 1GB of data from the bond's first address to the server on port 5201
    iperf3 -c 192.168.0.207 -p 5201 -n 1G -T "Stream to 5201" -B 192.168.0.190 &
    # Send 1GB of data from the bond's second address to the server on port 5202
    iperf3 -c 192.168.0.207 -p 5202 -n 1G -T "Stream to 5202" -B 192.168.0.191 &
    # Wait for both background processes to finish
    wait

The -c parameter gives the address of the server. -p is the port number, using the default for the first stream and port 5202 for the second stream. The -n parameter asks iperf3 to whip up a gigabyte of data out of thin air, and -T is there as a tag to distinguish the two streams in the diagnostic output. The final -B parameter defines the sending address for that particular stream so that, with the right hash policy, each stream uses its own individual half of the bonded Ethernet link.

Instead of using magically evaporating data, which only tests the speed of the link, we can optionally persuade iperf3 to write those data to storage on the server. We do this by adding the parameter -F to the server command:

    iperf3 -s -F ./incoming3a & iperf3 -s -p 5202 -F ./incoming4a

Now we can change directories on the server between a hard drive directory and an NVMe directory to compare write speeds between the two media.

To test reading from the NAS, we reversed the roles, running the iperf3 client on the NAS and the server on the laptop. If you add the -F parameter to the client command and use it to name a pre-existing file on the client’s storage (now the NAS), iperf3 will pick up that file and transfer it through the Ethernet link. We’re not interested in how fast the Framework laptop saves this file on its own NVMe drive, so there’s no -F parameter at the server end.

The read/write results this test produced were somewhat unexpected:

| Test Description | Data Rate (Mb/s) | Time Taken (secs) |
|---|---|---|
| Link only, no read/write (LACP bond) | 4700 | 3.7 |
| Read from F4-223 NVMe | 2441 | 7.3 |
| Read from F4-223 Hard Drive | 1606 | 12 |
| Write to F4-223 NVMe | 240 | 35.9 |
| Write to F4-223 Hard Drive | 4.91 | 1750 |

The first row simply tests the transfer rate of the bonded Ethernet link and confirms that it’s running at something close to the theoretical maximum of 5Gb/s. The four rows below that show that the NVMe-based storage is usefully more responsive than the spinning rust, particularly in the bottom two rows, where we’re writing to the storage. In fact, the difference here is frankly staggering!
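The two numeric columns are linked by simple arithmetic: rate equals bits moved divided by time taken. As a sketch, assuming the first row moved our two streams of iperf3’s “-n 1G” each, and taking 1G to mean 1GiB (1,073,741,824 bytes), 3.7 seconds works out close to the measured rate:

```bash
#!/usr/bin/env bash
# Data rate in Mb/s (decimal megabits) from bytes transferred and seconds taken.
throughput_mbps() {
  awk -v b="$1" -v s="$2" 'BEGIN { printf "%.0f\n", b * 8 / s / 1000000 }'
}

# Assumed: two streams of 1GiB each (iperf3's "-n 1G"), taking 3.7 seconds in all.
throughput_mbps $(( 2 * 1024 * 1024 * 1024 )) 3.7   # prints 4643
```

The small gap from the measured 4700Mb/s is within what rounding the time to one decimal place can account for.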

What’s going on?

Why the Long Write?

Our fio tests showed writing to be faster than reading for both types of media. But here we’re seeing the reverse.

The first thing to recognise is that our test isn’t using an ordinary write process. iperf3 sends the source file across the link piecemeal, in chunks, and, crucially, each chunk waits to be sent until the previous chunk has been written to storage. Typical writes in a working situation are buffered in memory and then dumped onto the storage in one piece. iperf3’s intermittent streaming amplifies the difference between solid-state and spinning-rust write times, drawing attention to what’s really happening under the hood.

Then there’s the fact that Terramaster’s TRAID configuration, effectively RAID 1 for both the hard disk and the solid state storage, is carrying out each write process twice, mirroring the data.

BTRFS transfer speeds in comparison with two other popular Linux file systems. Acknowledgements to the Phoronix Test Suite.

On top of this, we’ve formatted all the F4-223’s storage as BTRFS. BTRFS uses copy-on-write, a process that avoids overwriting old data with new. It may be that this is slowing the write process for both SSDs and HDs (but with a significant advantage for data security).
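Copy-on-write is easier to picture with a toy. In this bash sketch, which is purely illustrative (BTRFS’s real on-disk structures are far more elaborate), the array blocks stands in for the disk and file_map records which block holds a file’s current contents. An update never touches the old block: it writes a fresh one and then repoints the file, which hints at why copy-on-write can mean extra work on every write.

```bash
#!/usr/bin/env bash
# Toy copy-on-write store (illustrative only; not BTRFS's real layout).
blocks=()                 # the "disk": blocks are appended, never overwritten
declare -A file_map=()    # file name -> index of the block holding its current contents

cow_write() {
  local name=$1 data=$2
  blocks+=("$data")                            # write the new version to a fresh block
  file_map[$name]=$(( ${#blocks[@]} - 1 ))     # then repoint the file at it
}

cow_read() {
  echo "${blocks[${file_map[$1]}]}"
}

cow_write notes.txt "v1"
cow_write notes.txt "v2"
cow_read notes.txt      # prints v2
echo "${blocks[0]}"     # prints v1: the old version is still intact on "disk"
```

The surviving old block is also the data-security benefit: an interrupted update leaves the previous version untouched.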

Although we didn’t test the NVMes outside the Terramaster NAS, it seems fair to trust Seagate’s specs and to assume that the drives themselves are not contributing to the bottleneck.

Real Life Situation

Running a bonded pair of Ethernet links isn’t what the Tested Technology LAN is going to be doing during the working day. It was time to come down to earth and compare how the new NVMe storage and the established HD storage perform over our regular LAN.

So we repeated the iperf3 tests over our standard 1Gb/s Ethernet connection, this time at three different buffer sizes.

| Buffer Size | HD Write (Mb/s) | NVMe Write (Mb/s) | HD Read (Mb/s) | NVMe Read (Mb/s) | Write Delta % | Read Delta % |
|---|---|---|---|---|---|---|
| 4K | 0.197 | 3.97 | 3.85 | 3.73 | 1915.23 | -3.12 |
| 64K | 5.09 | 55.2 | 61.6 | 62.8 | 983.89 | 1.95 |
| 128K | 8.09 | 84.8 | 89.9 | 81.4 | 948.09 | -9.46 |

Conclusion

Our test results have something important to tell us about the impact of solid-state storage on the traditional HD-based NAS market. Battle lines between HDs and SSDs are being drawn up, and this is something we’ve touched on in our piece on Seagate’s CORVAULT.

Hard disks still offer a far better price per terabyte than solid-state drives. And new hard drive technologies like HAMR look like keeping that gap open for years to come. But all-solid-state NAS devices are beginning to appear in the consumer and prosumer market, and we hope to be able to follow this development in the coming year.

Meanwhile, we’ve taken Terramaster’s TOS 5 about as far as it can go with the hardware available to us. TOS 6 is imminent, Terramaster tells us, and we look forward to writing it up in the fullness of time.

Chris Bidmead