This isn’t a Tested Technology review. I didn’t get to test the technology. I glimpsed it in an air-conditioned basement at Seagate’s Amsterdam HQ just outside Schiphol airport after attending a two-hour press conference learning what it does and how it does it.

We also discussed other things than the product itself. As an enterprise staple, the CORVAULT verges on the conservative in respect of the core technology, the hard drives themselves. But during the workshop we also talked about new hard drive developments scheduled for future generations of the CORVAULT.

Because of the brevity, the absence of hands-on experience, and theoretical discussions about the future of hard drives, the whole one-day event was as far as you can imagine from being material for a Tested Technology review.

I’m writing it up here for two reasons. The less interesting reason is that Tested Technology is returning a favour. Seagate has donated many terabytes of storage to our recent NAS reviews. Without those drives the reviews would have had much less to say. So the present article is a sort of courtesy to the company—still, I hope, observing our house rules of writing at arm’s length from the manufacturer and putting our readers front and centre.

Which leads us to the more interesting reason that Seagate’s CORVAULT is taking up space here.

I don’t expect the average Tested Technology reader to care much about a direct attached storage rack system jam packed with 106 hard drives and costing many tens of thousands of dollars. Even if you’re a small schoolboy with his nose pressed to the shop window (a mode I confess I was in as we descended to the basement), the Seagate CORVAULT isn’t something you’d consider taking home. Installed domestically, the noise this thing makes with all drives spinning and its hot-swappable fans powered up—despite Seagate’s cunning “Acoustic Shield™” technology doing its best to keep it cool and quiet—would destroy any marriage. And this publication doesn’t (yet) need petabytes of storage, even if we could work out how to connect it to Tested Technology’s LAN.

The Google server farm at The Dalles, Oregon. A forest of multicoloured LEDs marking the traffic of the world’s data.

Enterprises do need to store masses of data. In fact, Seagate suggests they should be storing much more data than they are at the moment, that they’re jettisoning potentially valuable information because they don’t have enough room for it. On present trends, by 2026 companies at large will be retaining 15ZB of valuable data and letting 206ZB evaporate. You won’t be surprised to learn that Seagate is on a mission to help enterprise preserve much more of these data.

When I first owned a computer in the early 1980s, storage was measured in kilobytes (KB). By the mid-’80s, kilobytes had become megabytes (MB), each MB being 1024 KB. (My first ever hard drive had a capacity of 5MB.) Ten years later, that same magic multiplier, 1024, had taken megabytes to gigabytes (GB). And today, multiplying again by 1024, we’re now used to thinking in terms of terabytes (TB).

But that multiplying shows no signs of stopping. Where enterprise leads, consumers follow. And enterprise is already operating in terms of that next round of multiplication, petabytes (PB) and onward thereafter to zettabytes (ZB).
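
To make that ladder concrete, here is a minimal Python sketch. It assumes the binary multiplier of 1024 at every step, as in the text above (drive vendors usually quote decimal units, so treat the exact byte counts as illustrative). The names `UNITS` and `bytes_in` are mine, and the list includes the exabyte (EB), the rung that sits between petabytes and zettabytes.

```python
# Storage units, each 1024 times the previous one (the multiplier used in the text).
UNITS = ["KB", "MB", "GB", "TB", "PB", "EB", "ZB"]

def bytes_in(unit: str) -> int:
    """Return the number of bytes in one of the given unit, using steps of 1024."""
    return 1024 ** (UNITS.index(unit) + 1)

for unit in UNITS:
    print(f"1 {unit} = {bytes_in(unit):,} bytes")

# How many further multiplications separate today's terabytes from zettabytes.
steps_tb_to_zb = UNITS.index("ZB") - UNITS.index("TB")
print(f"TB to ZB: {steps_tb_to_zb} further multiplications by 1024")
```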

Perhaps because, at heart, Seagate aims to achieve this goal with the same underlying technology that’s in the consumer and semi-pro NASes we’re writing about here. Only a few years ago this would have been the same technology that stores the data in your PC or laptop, but solid state drives (SSDs) have changed all that. And, yes, SSDs are now showing up in NASes too. But the cost-benefits of rotating hard drives as data storage seem set to stay well ahead of solid state drives into the foreseeable future.

Drive manufacturers like Seagate and its main direct rival, Western Digital, are predicting that over the next five years or so, within the familiar 3½ inch, half height form factor of today’s (and last century’s) rotating drives, internal technology developments will see a radical expansion of usable storage space. The hard drive you buy in 2026 will physically resemble the hard drive you bought last year. It will interface with your operating system in exactly the same way. And it will probably cost about the same. But its capacity will be 50TB or more!

How do you turn a hard drive into a TARDIS, nearly trebling its capacity while leaving it the same size and shape? The TARDIS uses “Dimensional Transcendentalism”: the inside of the TARDIS exists in one place in space and time, while the outside is in another dimension, somewhere else, and at some other time. I don’t know if Seagate ever tried that, but the solution they’ve come up with makes a lot more sense:

If you want to cram more things into the same volume, make the things smaller.

Out-TARDISing the TARDIS

This has been the strategy behind the increase in drive capacity over the past three decades or more. We can think of the stored bits of data, the ones and zeros packed onto the concentric tracks on the surfaces of the platters, as tiny clusters of magnetisable granules. Year on year technological development has enabled manufacturers to shrink the size of those tiny spots, moving them closer together, placing more and more of them on to the same length of track. As well as making the tracks thinner and moving them closer together.

More bits on the platter

The big breakthrough was the discovery in the late 1980s of giant magnetoresistance (GMR), which allowed for a massive reduction in physical bit size on the platter. This became a commercial reality a decade later, increasing the storage density by an astonishing (approximately) two orders of magnitude.

Two orders of magnitude is 100 times, which doesn’t quite account for the shift from megabytes in 1990 to gigabytes in 2000 and on to terabytes as the new millennium wore on. Other factors include the adoption of perpendicular recording and an increase in the number of platters per drive.

Perpendicular recording had first been demonstrated in the lab in the mid-1970s but only became a commercial reality in 2005. Instead of defining ones and zeros by the horizontal magnetisation of the cluster across the flat surface of the platter, the new orientation magnetised the cluster vertically. You’ll get the picture if you think of a series of tiny bar magnets that instead of lying nose to tail around the track, turn through ninety degrees and point up and down, and so can be packed closer together.

(The picture shows a different coating on the platter when we switch to perpendicular recording. Typically we’re now using CoCrPt, an amalgam of cobalt, chromium and platinum. But the industry still refers to this affectionately as “rust”.

Oh, and please treat that picture as little more than a simplistic analogy. The physics is a tougher proposition I’m not going to go into here. Even if I could.)

More platters on the spindle

A decade ago, this would typically be six platters, providing 12 magnetisable sides, spinning in an atmosphere of filtered air.

The air gets in the way. With the platters spinning, air tends to turbulence, which sets the platters wobbling. When I first heard about this I thought this was a problem Joseph Swan had solved with the invention of the light bulb. To stop the filament burning up Swan got rid of the air. Vacuum. Job done.

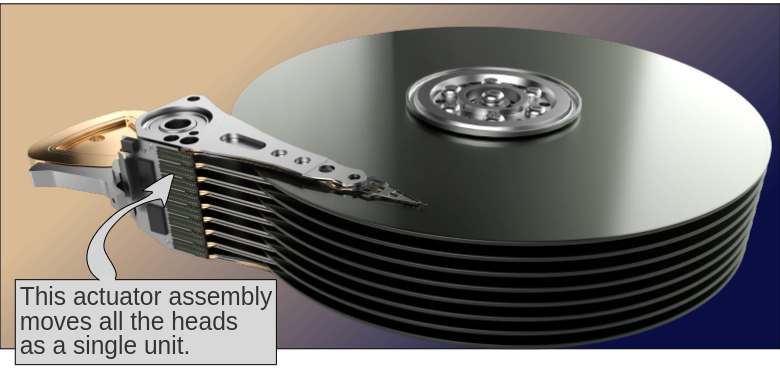

Luckily, I wasn’t in charge of hard drive design. The filtered air inside a hard disk drive has a vital job to do. It’s the secret of that 5nm gap between the actuator head and the platter. The head maintains its flying height literally by surfing on the thin film of air between it and the platter. No air would make the platter a no-fly zone.

Unless…

…instead of a vacuum, you use a lighter gas. At room temperature and pressure, a litre of dry air weighs around 1.3 grams. Under the same conditions, the same volume of helium weighs less than 0.2 grams. But the presence of helium is enough to keep the heads flying, while its very low density minimises turbulence. And hard drive designers have discovered that if you pump out all the air and pump in helium instead, you can pack in twice the number of platters.
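
Those weights can be sanity-checked with the ideal gas law. The sketch below is my own back-of-envelope calculation, not anything supplied by Seagate. It assumes one standard atmosphere and 20°C as "room temperature", and treats both gases as ideal. On those assumptions air comes out at roughly 1.2 g per litre (nearer 1.3 g at 0°C) and helium at under 0.2 g.

```python
# Ideal-gas estimate of how much a litre of gas weighs: rho = P * M / (R * T).
R = 8.314462618       # molar gas constant, J/(mol*K)
PRESSURE = 101_325    # one standard atmosphere, Pa
TEMPERATURE = 293.15  # 20 degrees C in kelvin (my assumption for "room temperature")

MOLAR_MASS = {        # grams per mole
    "dry air": 28.97,
    "helium": 4.0026,
}

def grams_per_litre(molar_mass_g: float) -> float:
    """Density in g/L. P*M/(R*T) is in g/m^3 when M is in g/mol, so divide by 1000."""
    return PRESSURE * molar_mass_g / (R * TEMPERATURE) / 1000

for gas, molar_mass in MOLAR_MASS.items():
    print(f"{gas}: {grams_per_litre(molar_mass):.2f} g/L")

ratio = grams_per_litre(MOLAR_MASS["dry air"]) / grams_per_litre(MOLAR_MASS["helium"])
print(f"air is about {ratio:.1f} times denser than helium")
```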

The Status Quo and Beyond with HAMR

That’s the story behind today’s 22TB Seagate IronWolf drives like the one we added to our 4-bay Terramaster drive in part 3. Seagate’s current shipping configuration of its 2.1PB CORVAULT uses the previous generation of 20TB HDDs, labelled as enterprise class Exos drives. Fully populated, the whole installation costs around $59,000. By the end of this year Seagate expects to offer a 2.5PB version with the same number of drives but now using HAMR.
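
The arithmetic behind those figures is easy to check. This sketch uses only the numbers quoted above and assumes decimal vendor units (1PB = 1000TB) and raw capacity, before any space is set aside for redundancy. The variable names are mine.

```python
DRIVES = 106                # drive count quoted for the CORVAULT
CURRENT_DRIVE_TB = 20       # Exos drives in the current shipping configuration
SYSTEM_PRICE_USD = 59_000   # approximate fully populated price quoted above
TARGET_RAW_PB = 2.5         # capacity Seagate expects from the HAMR version

raw_tb = DRIVES * CURRENT_DRIVE_TB                 # raw capacity in decimal TB
raw_pb = raw_tb / 1000                             # assuming 1 PB = 1000 TB
usd_per_tb = SYSTEM_PRICE_USD / raw_tb             # whole-system cost per raw TB
implied_drive_tb = TARGET_RAW_PB * 1000 / DRIVES   # drive size the 2.5PB version implies

print(f"raw capacity today: {raw_pb:.2f} PB")
print(f"system cost: about ${usd_per_tb:.0f} per raw TB")
print(f"2.5 PB across {DRIVES} drives implies about {implied_drive_tb:.1f} TB per drive")
```

So the quoted 2.1PB is 106 twenty-terabyte drives rounded down, and the 2.5PB version implies drives of roughly 24TB.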

Heat-assisted Magnetic Recording is the technology Seagate is banking on to take storage into the future. How does it work to pack the data more closely?

Current conventional magnetic recording writes each bit to a tiny cluster of grains of what we still like to call “rust”. Each cluster is made up of a hundred or so grains. There’s a delicate balance to be struck in choosing how many grains to include in each cluster, that is to say, the size of each bit on the platter. It needs to be large enough to ensure that the state:

- can be switched reliably with minimal energy expenditure

- will remain stable after the write

- can reliably be measured during the read

For any given bit size (grains per cluster) these factors will depend on the coercivity of the grain material. This is a measure of how difficult it is to demagnetise the material. You can think of this as a question of how much power will be needed to flip the bit from a one to a zero, or vice versa.

If you use a material with low coercivity that makes bit-flipping easy, the head will need lower power during the write cycle and you can get away with fewer grains per cluster. So you can pack the bits closer together. But bits that flip easily can’t necessarily be trusted to retain your data.

And, after all, data retention is what the drive is there for. To guarantee this, you’re looking for high coercivity. So the ideal grain cluster will have low coercivity when you write to it and then switch to high coercivity immediately after for data retention and subsequent read out.

Is this asking too much from a tiny patch of rust?

Hence HAMR.

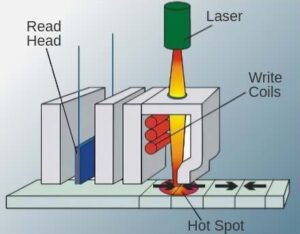

The question then becomes: how do you pour energy into a tiny cluster of grains without heating up the neighbouring clusters (lowering their coercivity and making them vulnerable to data loss)?

This might sound like a tough call, but we’ve been doing something of this kind in cheaply available consumer equipment since the arrival of the CD player in 1982. In fact, the low-power semiconductor laser was discovered 20 years before that when researchers from IBM, independently and almost simultaneously with researchers from General Electric and MIT’s Lincoln Laboratory, demonstrated laser action using the semiconductor gallium arsenide.

The “L” in “laser” stands for light.

The special feature of the laser is that the energy emitted is coherent. That is to say all the waves emanating from it share the identical frequency (colour) and will be exactly in phase. This coherence keeps the beam tight and narrow, concentrating its energy on the target.

You might think this means that it efficiently hits the spot. In the simplistic version of the HAMR story (as shown in the diagram and as propagated by Seagate themselves), yes, the magnetisation target is rapidly and briefly (very briefly—we’re talking nanoseconds) heated by the laser beam.

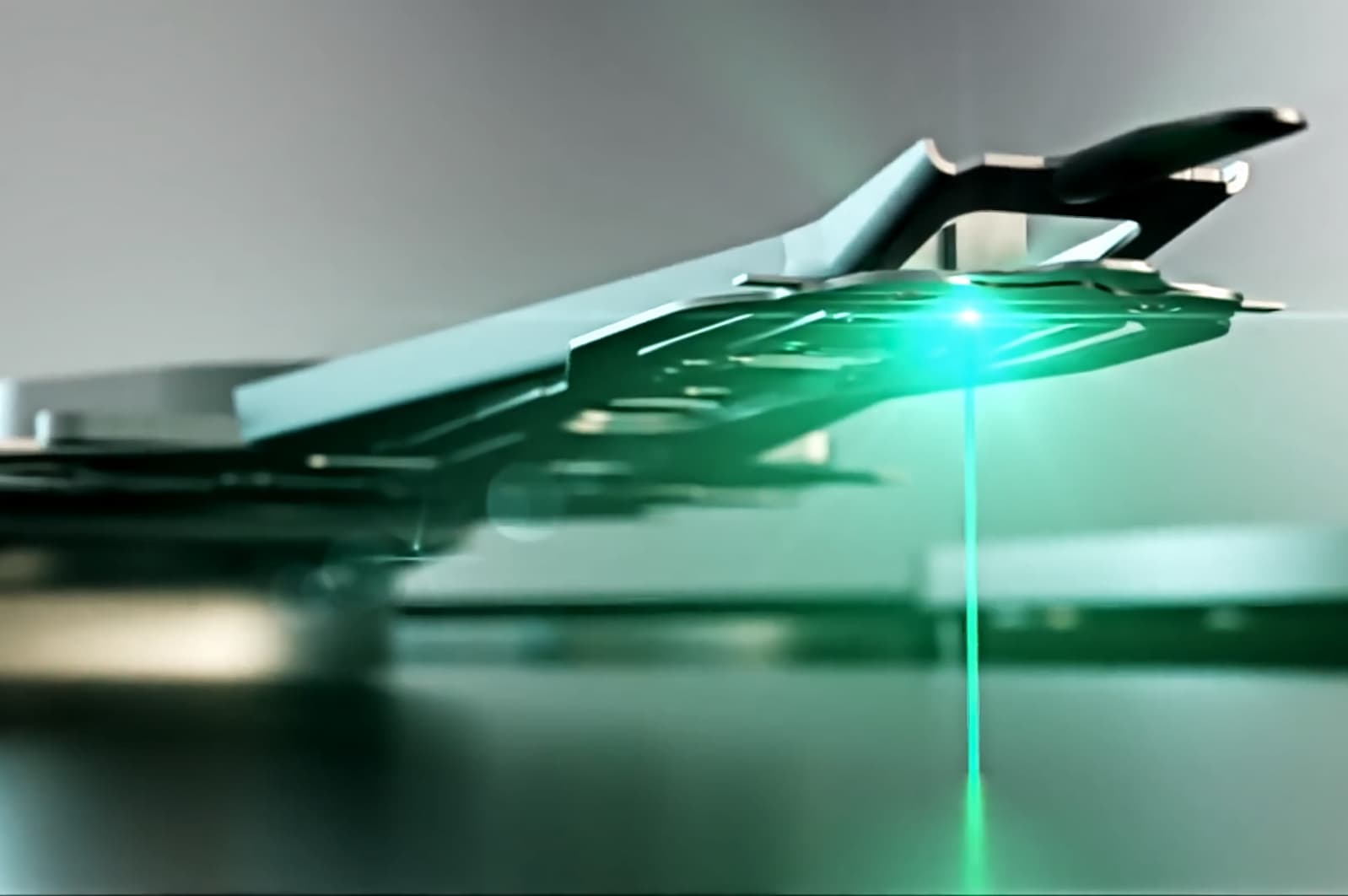

This imaginative reconstruction, taken from one of Seagate’s own promotional videos, illustrates the misleading idea of direct laser heating. The head seems to me far too distant from the platter for any near-field effects to come into play and the green laser beam is shown as the sole source of heat.

But in the labs, no such luck. The HAMR bit size needs to be significantly smaller than the 10nm diameter bit used in current conventional perpendicular recording. But a phenomenon called “the Abbe diffraction limit” prohibits the laser beam focusing down to those dimensions. This may well have been one of several problems that delayed HAMR from its predicted arrival on the general market around 2017.

The Abbe diffraction limit says that if you want to heat a tiny sub-10nm target, you can’t do it using photons. Other means of writing smaller bits were tried in the labs. For a while microwaves looked promising. Even ultrasound was on the cards.
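
To see how far out of reach a sub-10nm spot is, here is a small sketch of the Abbe formula, d = λ / (2·NA). The wavelengths are the familiar CD and Blu-ray laser colours plus a generic green, chosen by me for illustration; the article's sources don't state the wavelength of the HAMR laser. A numerical aperture of 1.0 is a deliberately generous assumption.

```python
def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Smallest resolvable spot for conventional optics: d = wavelength / (2 * NA)."""
    return wavelength_nm / (2 * numerical_aperture)

TARGET_NM = 10  # the bit size HAMR needs to get below, per the text
NA = 1.0        # generous assumption for the focusing optics

# Illustrative wavelengths in nanometres (my choice, not Seagate's figures).
WAVELENGTHS_NM = {
    "CD player infrared": 780,
    "green": 532,
    "Blu-ray violet": 405,
}

for name, wavelength in WAVELENGTHS_NM.items():
    spot = abbe_limit_nm(wavelength, NA)
    print(f"{name} ({wavelength} nm): spot no smaller than {spot:.0f} nm, "
          f"about {spot / TARGET_NM:.0f} times the target")
```

Even the shortest of these wavelengths leaves the spot some twenty times too wide, which is why a different way of delivering the energy was needed.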

And then the plasmonic nanoscale antenna came to the rescue. I cherish this Doctor Whoish name for the technology but it’s more commonly known as a near-field transducer (NFT). Imagine a wave guide, like the barrel of a gun. The energy goes into it as photons and emerges as surface plasmons. This modified form of energy can be focussed to the sub-10nm dimensions we need for our HAMR hot spot, creating a highly localised transient temperature somewhere between 450°C and 500°C.

So for HAMR you use a high coercivity ultrathin coating of an iron-platinum alloy on the platters, add a miniature laser smaller than a grain of salt to each read/write head and use that to fire photons into an NFT that transforms them into a tightly focussed oscillating surface charge that locally flash heats a sub-diffractive area of the substrate a few nanometers in diameter.

Easy when you know how.

CORVAULT’s Special Sauce

I’ve mentioned that the core technology in CORVAULT’s drives clings to tradition and is not yet tempted to explore the extreme capacities of Seagate’s latest hard disks. Arguably, with 106 individual drives under its control, capacity isn’t going to be a major issue.

And, in any case, running 106 individual drives presents its own problems.

My first purchase of a house, when I moved out of my two room apartment, seemed to coincide with a noticeable deterioration in the manufacturing quality of light bulbs. Instead of having to buy new light bulbs every two years, I now had to swap one out every six months or so. It took me some time to realise that more rooms meant more light bulbs which meant more opportunities for light bulb failure.

(So I’m blaming Joseph Swan for my error here too.)

More hard drives mean more chances of hard drive failure. The failure of a drive doesn’t lose your data if you’ve distributed those data in the form of parity redundantly across the drives. However, it does raise an issue we’ve discussed here before.
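
The light bulb effect is simple probability. The sketch below assumes each drive fails independently with the same annual failure rate; the 1% figure is a hypothetical round number of mine, not a Seagate specification.

```python
def p_at_least_one_failure(drives: int, annual_failure_rate: float) -> float:
    """Chance that one or more of `drives` independent drives fails within a year."""
    return 1 - (1 - annual_failure_rate) ** drives

AFR = 0.01  # hypothetical 1% annual failure rate per drive

for drives in (1, 4, 106):
    chance = p_at_least_one_failure(drives, AFR)
    expected = drives * AFR
    print(f"{drives:>3} drives: {chance:.0%} chance of at least one failure, "
          f"{expected:.2f} failures expected per year")
```

On those assumptions a single drive is a one-in-a-hundred worry, while a rack of 106 should expect about one failure a year.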

The idea of using multiple drives that keep an eye on each other’s data mathematically to insure against drive failure is broadly RAID (Redundant Array of Inexpensive Disks). This caught on in the 1980s when those inexpensive drives became readily available. It meant you could replace a failed drive and rebuild its data from the redundant information on the other drives.
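
The mathematics can be as simple as exclusive-or. Here is a minimal sketch of single-parity protection in the style of RAID 5, with three tiny "drives" and one parity block. It illustrates the general principle only; it makes no claim about the encoding Seagate actually uses, and the block contents are made up.

```python
from functools import reduce

def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, one byte column at a time."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three data blocks, one per drive, plus a parity block stored on a fourth drive.
data = [b"drive-A!", b"drive-B!", b"drive-C!"]
parity = xor_blocks(data)

# Drive B dies. XORing the survivors with the parity block recreates its contents.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

assert rebuilt == data[1]
print(rebuilt)
```

The rebuild works because XORing a value with itself cancels out, leaving only the missing block.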

But this was back when hard disk drive capacity was still being measured in megabytes. The rebuild might take a few hours. Rebuilding one of those much higher capacity drives in the CORVAULT could take very, very much longer.

The risk during a rebuild is that a second drive, working flat out to help with the rebuild, might also fail, possibly jeopardising the whole array. And the larger hard drives become, the longer the rebuild and the greater the risk. So something needs to be done to speed up drive rebuilds.
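
A rough feel for the numbers: a conventional rebuild can go no faster than the replacement drive can write. The sketch below assumes a sustained write speed of 250MB/s, a hypothetical figure of mine, and decimal units. Real rebuilds compete with live traffic, so treat these as best cases.

```python
def rebuild_hours(capacity_tb: float, write_mb_per_s: float) -> float:
    """Best-case hours to fill one replacement drive at a sustained write speed."""
    capacity_mb = capacity_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return capacity_mb / write_mb_per_s / 3600

SUSTAINED_MB_PER_S = 250  # hypothetical sustained write speed for a single drive

for capacity_tb in (4, 12, 20, 24):
    hours = rebuild_hours(capacity_tb, SUSTAINED_MB_PER_S)
    print(f"{capacity_tb:>2} TB drive: at least {hours:.1f} hours")
```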

ADAPT

ADAPT is one of those marketing-manufactured acronyms, explained as “Autonomic Distributed Allocation Protection Technology”. It’s a proprietary take on RAID for big arrays, replacing the conventional arrangement with a protection scheme that takes full advantage of its centralised control over a huge number of independently activated sets of read/write heads.

The scheme claims to provide superior and easy to manage data protection for very large arrays. What particularly interested me though was ADAPT’s solution to the problem of rebuild time when replacing a failed drive.

ADAPT implements a “distributed hot spare” arrangement. A “hot spare” is an empty drive standing by to take over when any one drive fails. A “distributed hot spare” is a virtual drive made up of slivers of multiple physical drives. ADAPT rebuilds the downed drive’s data on this distributed hot spare, rather than writing all the data to a single physical drive. Later, all these reconstituted data on the virtual drive can be copied across to a new physical drive.

Why complicate the rebuild with this intermediate virtual drive? The parity data striped across multiple drives that the rebuild will rely on already constitutes a virtual drive. The whole idea of RAID is that all those data are still accessible even though the physical drive where they were stored is kaput.

But while they’re stored only as parity, those data have no redundancy. Once the rebuild to the virtual drive is done, redundancy has been restored. Whew!

But, hang on. We’re trying to speed up the rebuild. How does doing the rebuild twice (once to the virtual drive and later to the replaced physical drive) solve that problem?

Here’s the trick. The first rebuild, to the virtual drive, is very fast. There’s no hurry about the second, much slower, rebuild to the physical drive, because redundancy has already been secured. It can take place at a leisurely pace in the background.

The first rebuild is fast because when you write your data to a virtual drive you’re striping it across multiple physical drives, each with its own actuator head assembly. You can stream the data in parallel to these drives as you read the data in parallel off the various drives holding the parity. Depending on the number of drives you’ve chosen to stripe your virtual hot spare across, this first rebuild can be very fast indeed.
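
Here is a toy model of why spreading the spare helps. It deals rebuilt chunks round-robin onto slivers of several drives and assumes every drive writes at the same speed, so the busiest drive sets the pace. It is an idealised sketch of the general idea, not Seagate's algorithm: the function names are mine, and it ignores the read side and any controller bottleneck.

```python
def assign_chunks(n_chunks: int, spare_drives: int) -> list[list[int]]:
    """Deal rebuilt chunks round-robin onto slivers of the spare drives."""
    slivers: list[list[int]] = [[] for _ in range(spare_drives)]
    for chunk in range(n_chunks):
        slivers[chunk % spare_drives].append(chunk)
    return slivers

def relative_rebuild_time(n_chunks: int, spare_drives: int) -> float:
    """Rebuild time relative to one physical spare: the busiest drive sets the pace."""
    busiest = max(len(sliver) for sliver in assign_chunks(n_chunks, spare_drives))
    return busiest / n_chunks

CHUNKS = 1000  # hypothetical number of chunks on the failed drive

for drives in (1, 8, 50, 105):
    share = relative_rebuild_time(CHUNKS, drives)
    print(f"spare spread over {drives:>3} drives: {share:.1%} of the single-drive time")
```

In this model, spreading the spare across eight drives cuts the first rebuild to an eighth of the time, and spreading it across the rest of a 106-drive array cuts it to about a hundredth.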

ADAPT is Seagate’s proprietary array solution but its basic architecture seems to be similar to Terramaster’s TRAID, and probably not unlike what Synology is doing with SHR (Synology Hybrid RAID). The conventional RAID concepts are down there in the foundations but with 106 drives to play with Seagate has huge flexibility to juggle its RAID strategies.

ADR

We’ve discussed how Seagate’s ADAPT improves on this scenario. But I haven’t yet mentioned what impressed me most of all during the workshop.

Over my more than 40 years as an IT journalist I’ve had many a drive fail on me. In the old days typically the head would nose dive onto the platter, filling the room with a terrifying banshee screech as it plowed into the surface coating. Later hard drive generations would announce their demise with an ominous ticking sound, like a deathwatch beetle. Either way, the drive was playing funeral music.

And a dead drive was a dead drive. Landfill. Or used to be. Seagate has been exploring ways to make drives self-healing.

ADR stands for Autonomous Drive Regeneration. It’s really an extension of a process that hard drive manufacturers have learnt to employ since the technology’s inception. No manufactured platter is perfect and there will always be areas of the magnetic coating that fail to hold data securely. Once the drive has been assembled in the factory, one of the drive controller’s first jobs is to hunt down those dud spots and map them out.

During the drive’s later employment in its real-world tasks, the same mechanism is always on the lookout for any dangerously weak areas that may develop in the course of use. They will be added to the list of out-of-bounds zones, any existing data on them having been lifted off and transferred to virgin sectors reserved for contingency.

So in a sense, a modern hard drive is always in a state of “autonomous drive regeneration”. But the process I’ve described here can’t revive a head-crash drive. ADR can.

With ADR, the drive controller detects the failed platter surface (not hard to do), maps that out and then rethinks its entire platter surface resource to recreate itself as a brand new drive, albeit with a reduced capacity. Ten years ago, with platters limited to four or six, a head crash writing off a surface could reduce the capacity significantly by around 10 per cent. But after a head-crash on one of its surfaces, today’s 10-platter drive could theoretically come back to life with 95% of its original capacity. A failed 20TB drive goes back to work as a still very useful 19TB drive.
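
The percentages follow from counting surfaces. This sketch assumes two recording surfaces per platter and an equal share of the capacity on each, which is my simplification. The 8TB and 12TB capacities for the older drives are made-up examples.

```python
def capacity_after_surface_loss(capacity_tb: float, platters: int,
                                lost_surfaces: int = 1) -> float:
    """Remaining capacity if each platter has two equal surfaces and some are mapped out."""
    surfaces = platters * 2
    return capacity_tb * (surfaces - lost_surfaces) / surfaces

# (platters, original capacity in TB); only the 10-platter 20TB case is from the text.
for platters, capacity_tb in ((4, 8), (6, 12), (10, 20)):
    remaining = capacity_after_surface_loss(capacity_tb, platters)
    print(f"{platters:>2} platters, {capacity_tb} TB: {remaining:.1f} TB left "
          f"({remaining / capacity_tb:.1%} of original)")
```

Losing one of eight surfaces costs 12.5%, one of twelve about 8%, and one of twenty just 5%.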

Of course, there’s no reserved contingency area on the drive to rescue the terabyte of data that was on that crashed surface. The “ADR self-healing” applies to getting the drive back into service as a hardware component. But once that’s done, ADAPT can now do its cunning thing, whipping up a quick copy of the failed drive onto the virtual hot spare drive and then in its own time, in the background, restoring the data onto the physical hard drive.

But that wouldn’t work with any conventional RAID system. Among its 20TB peers, this newly resurrected 19TB drive would be a lost lamb. Although CORVAULT’s ADAPT likes to start with an array of identical capacity drives, it’s also always ready to—er—adapt to any changes like this. And welcome the lost lamb back to the tribe.

Winding Up and Coming Down to Earth

Hard drive developments like the use of helium and HAMR, offering capacities in excess of 22TB, are exactly what enterprise and data centres are looking for, continuing greatly to lower the cost of capacity and power consumption. And promising to go on doing so far into the future.

But will consumers benefit from these technologies too? In five years’ time a top of the range hard drive will very likely cost the same as today’s 22TB drive, around £500, but is set, at the least, to double the capacity. It will be the best deal for customers on a bangs-per-buck basis.

I’m dubious, though, that consumers in 2028 will have much use for drives this size. Already the trend among consumers is to solid state storage. We’re clearly happy to pay twice the price of hard drive storage for faster and in general physically very much smaller solid state drives. I suspect most of us feel we don’t at present need 22TB for our domestic data storage and are happy to settle for what our laptops and desktop machines provide.

That may well change. Over the course of my IT lifetime I’ve gone from needing to store phone numbers, to storing music tracks, to storing movies, a journey from kilobytes to megabytes to gigabytes. Future consumer needs may well include an always-on personal diary of holographic recordings, taking us on through terabytes to petabytes.

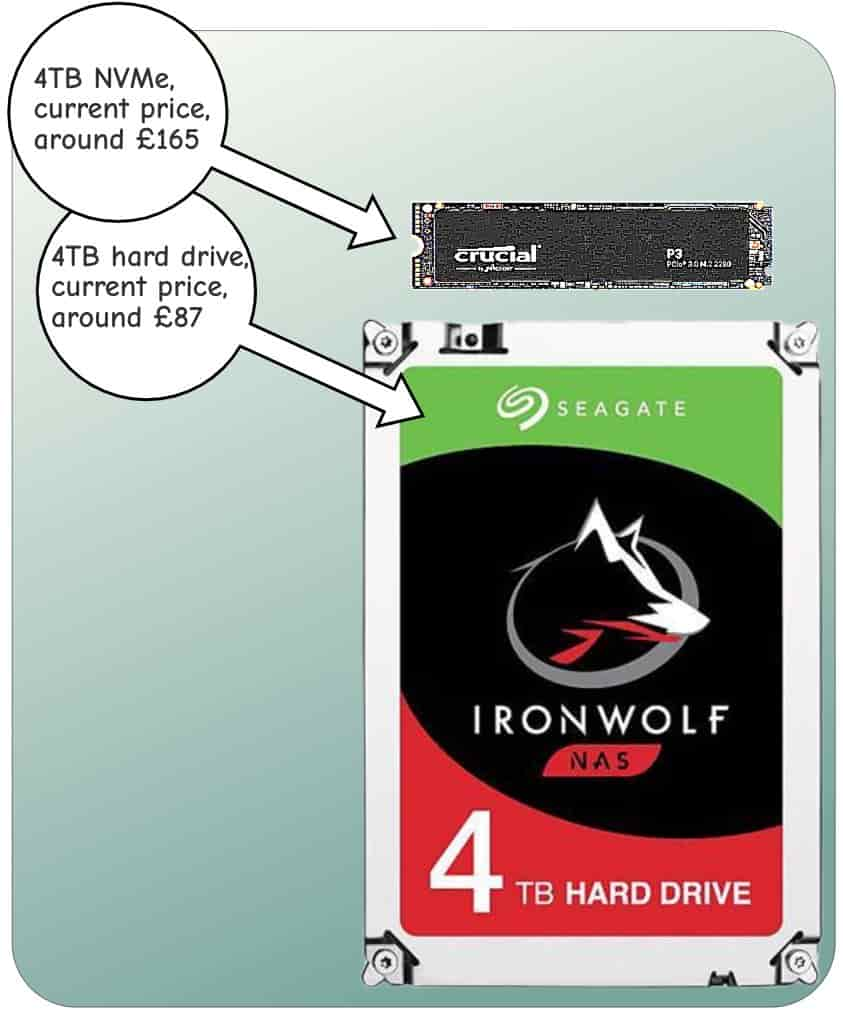

But today I suspect that the average consumer would be more than happy with, say, 12TB of local storage for their personal data. The latest 24TB HAMR drive you buy next year will cost you around £500. But if you’re only going to be using half of its capacity, 12TB, you might consider spending your £500 on three 4TB NVMe solid state drives (like the Crucial P3 4TB M.2 PCIe Gen3 NVMe, currently retailing at £165 each).

A 12TB conventional hard drive of the same quality (old school, from six years ago) is yours today for a little over £200. Prices of these (newly manufactured but older design) drives will continue to fall. But I have to wonder at what point they will stop being worth manufacturing. As 50TB becomes attainable towards the end of this decade, will drive manufacturers have any incentive to continue selling 3½” spinning rust devices with the sort of capacities consumers will need?
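
Putting those prices side by side as cost per terabyte makes the trade-off plainer. The figures are the approximate ones quoted above, with "a little over £200" rounded down to £200. The dictionary and its labels are mine.

```python
# (price in pounds, capacity in TB), using the approximate figures from the text.
OPTIONS = {
    "24TB HAMR hard drive": (500, 24),
    "3 x 4TB NVMe SSD": (3 * 165, 12),
    "12TB conventional hard drive": (200, 12),
}

def pounds_per_tb(price: float, capacity_tb: float) -> float:
    """Purchase cost per terabyte of capacity."""
    return price / capacity_tb

for name, (price, capacity_tb) in OPTIONS.items():
    print(f"{name}: £{price} for {capacity_tb} TB = "
          f"£{pounds_per_tb(price, capacity_tb):.2f} per TB")
```

On these numbers the solid state option costs roughly twice as much per terabyte as the big HAMR drive.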

By that time it seems likely that solid state drives, even if they remain twice the price per gigabyte of hard drives, will dominate the consumer market. Large server farms may continue to spin rust. And if they are by then offering remote storage to consumers at enticing prices over increasingly speedier Internet connections there will be even less reason to spin rust at home.

But there’s one other factor that may dominate the equation. Voices on the solid state side of the storage industry are suggesting that hard drives are destined to disappear from the market altogether. The rapidly declining cost of solid state storage has its part to play. But the chief reason, according to executives at Pure Storage, a Santa Clara, California company specialising in the manufacture of enterprise-class solid state storage devices, is the rising cost of electricity.

Hard drives are extraordinary devices, a challenging combination of electromechanical-manufacturing and nano-engineering. If the predictions for HAMR come true (and this section of the IT industry has a track record of accurately fulfilled road maps), hard drive vendors will increasingly find themselves selling ever larger capacity devices at a rapidly shrinking price per gigabyte.

But to an ever smaller market. That may—if the pundits at Pure are to be believed—disappear before the end of this decade.

However, Seagate seems confident that their hard work and diligent research will continue to pay off long after that. They’re in for a fight, no question, and devices like the CORVAULT are key weapons in that fight.

P.S.

Although it seems obvious that the essentially mechanical nature of hard drives must dictate higher energy use, the data storage company Scality has been carrying out practical tests that seem to contradict this idea. Scality points out that its products work across the range of different types of data storage, “so we don’t have a horse in this race”.

Scality’s conclusion is surprising: although at idle HDDs consume 15% more power than SSDs, HDDs reading data use only two-thirds of the energy burned up by SSDs for the same function.

The advantage of HDDs during the write cycle is much larger. This is because SSDs take more energy to write data than to read it whereas HDDs use very much less energy on the write than the read. Scality’s results show that HDDs burn up only one-third of the energy used by SSDs to write the same amount of data, even though SSDs get the job done much faster.

| Power data per drive | SSD | HDD | HDD Advantage |
|---|---|---|---|
| Idle (watts) | 5 | 5.7 | -15% |
| Active read (watts) | 15 | 9.4 | 37% |
| Active write (watts) | 20 | 6.4 | 68% |
| Read-intensive workload (avg. watts) | 14.5 | 8.7 | 40% |
| Write-intensive workload (avg. watts) | 18 | 6.6 | 63% |
| Power-density read-intensive (TB/watt) | 2.1 | 2.5 | 19% |
| Power-density write-intensive (TB/watt) | 1.7 | 3.3 | 94% |

Data supplied by Scality
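
The "HDD Advantage" column appears to be the percentage difference relative to the SSD figure: watts saved for the power rows, extra terabytes per watt for the density rows. That formula is my inference from the numbers, not something Scality states. The sketch below recomputes the column on that assumption. Six of the seven rows match the table; the idle row comes out at -14% rather than the quoted -15%, presumably because the published figures have been rounded.

```python
# (measure, SSD value, HDD value, True if a lower number is better)
ROWS = [
    ("Idle (watts)", 5, 5.7, True),
    ("Active read (watts)", 15, 9.4, True),
    ("Active write (watts)", 20, 6.4, True),
    ("Read-intensive workload (avg. watts)", 14.5, 8.7, True),
    ("Write-intensive workload (avg. watts)", 18, 6.6, True),
    ("Power-density read-intensive (TB/watt)", 2.1, 2.5, False),
    ("Power-density write-intensive (TB/watt)", 1.7, 3.3, False),
]

def hdd_advantage(ssd: float, hdd: float, lower_is_better: bool) -> float:
    """HDD advantage as a fraction of the SSD figure (inferred formula, see above)."""
    if lower_is_better:
        return (ssd - hdd) / ssd
    return (hdd - ssd) / ssd

for measure, ssd, hdd, lower_is_better in ROWS:
    print(f"{measure}: {hdd_advantage(ssd, hdd, lower_is_better):.0%}")
```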

Chris Bidmead 02-Aug-23