I didn’t intend to switch hearing aid brands. It happened like this:

My GP initially directed me to the NHS audiological department at North Finchley Memorial Hospital, which, as I subsequently discovered, actually outsources to Specsavers.

After a month or so with Specsavers, I asked to be transferred to Guy’s Hospital at London Bridge. The Guy’s Dental Unit has been looking after my teeth for over a decade now, and I’ve been very impressed with the ethos and the meticulous care offered by the NHS staff and students there.

Naively, I expected simply to carry on with my Teneo M+ hearing aids at the new venue. But I quickly discovered that Guy’s has its own preferred brands. The Teneos weren’t among them, so I would have to switch to another brand and go through the whole process again.

But there was a poster on the hospital wall. Patients who qualified were invited to join an EU-funded project investigating age-related and other hearing loss. Participants would be fitted with a pair of Oticon Opns and be given a smartphone to control them via Bluetooth.

The project was called Evotion. My one question: Where do I sign?

The Evotion Project

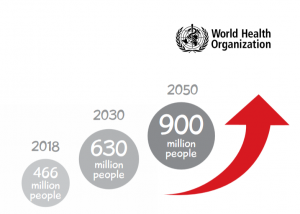

THE IMPETUS BEHIND THE EVOTION PROJECT is the rising worldwide level of hearing loss and our general lack of evidence-based information about how best to deal with it. The technology of hearing aids is now highly developed. Yet research suggests that 80% of over-55s who would benefit from hearing aids do not use them, and many of those who are fitted with hearing aids end up rejecting them.

Now that we are living longer, the problem of age-related hearing loss (ARHL) is becoming urgent. We have now come to understand that ARHL can lead to word-loss, social isolation and cognitive impairment and can be a factor in some types of dementia.

The Evotion project is accumulating data from over 1200 hearing aid users like me in the UK, Greece and Denmark in the hope of providing insights into these problems. Fitting hearing aids that are comfortable, manageable and recognisably beneficial to the patient may be one of several solutions.

Evotion Hardware

Participants are given a pair of Evotion-adapted Oticon hearing aids together with a Samsung Galaxy A Android smartphone.

The hearing aids that the Evotion project fitted me with don’t correspond exactly to Oticon’s offerings on the private market. Functionally and physically they appear very similar to Oticon’s top-of-the-range Opn 1 miniRITE. Branding on the battery case reads: “Oticon Evotion 12”. I’m told that from a marketing and regulatory point of view, they shouldn’t be classed as members of the Opn family, although they’re based on hardware and software from early Opn releases.

The opportunity to test these for a year is a privilege I appreciate. At present, certainly in the NHS, these hearing aids are exceptional; in some areas of the UK you can already get Oticons on the NHS, but they will be an earlier generation. Even so, the Evotion versions of the Opns (I’m just going to call them “Opns” from now on) are worth writing up here because they indicate the direction the technology is going. Among other things, the Evotion project can be seen as a step towards making this technology available to everybody.

Oticon’s Opn technology was launched in the summer of 2016. Its chief selling point (described, inevitably, in the marketing bumpf as “revolutionary”) was the idea of “BrainHearing™”. It’s an important idea that we’ll come to later.

The Galaxy smartphone is included for two reasons. Because the Opns are Bluetooth-enabled, they can be controlled from the phone using an app specifically developed for the project. The phone also plays a key part in relaying user data from the Opns back to the Evotion project’s data centre.

When the Opns were first launched in the summer of 2016, Oticon’s marketing department labelled them the “First Internet of Things Hearing Aids”. Yes, they can and do connect to the Internet, but only through the intermediary of another Internet-connected device. This is the role of the Galaxy smartphone.

The data collected also include the Opns’ classification of the surrounding sound environment into four classes: quiet, noise, speech, and speech-in-noise.

Samples are taken once a minute. The Opns themselves can buffer only a minute’s worth of data, so information is lost if the Bluetooth link to the smartphone drops for longer than that. The smartphone, by contrast, has enough memory to hold several months’ worth of data, so even a loss of Internet connection lasting many days has no effect on the study.
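
This is a classic store-and-forward arrangement, and its consequences are easy to check in a toy model. What follows is a minimal Python sketch, not Evotion software. It assumes one sample per minute, a hearing-aid buffer that holds exactly one sample, and a phone queue that never overflows. The names (`Environment`, `HearingAid`, `Phone`, `simulate`) are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Environment(Enum):
    # The four sound-environment classes the Opns report
    QUIET = "quiet"
    NOISE = "noise"
    SPEECH = "speech"
    SPEECH_IN_NOISE = "speech-in-noise"


@dataclass
class Sample:
    minute: int
    environment: Environment


class HearingAid:
    """Hypothetical model: the aid can buffer only one minute's sample."""

    def __init__(self):
        # maxlen=1: a new sample silently overwrites one that was never sent
        self.buffer = deque(maxlen=1)

    def record(self, sample):
        self.buffer.append(sample)

    def drain(self):
        # Hand over whatever is buffered (called only while Bluetooth is up)
        items = list(self.buffer)
        self.buffer.clear()
        return items


class Phone:
    """Hypothetical model: the phone queues samples until the Internet returns."""

    def __init__(self):
        self.queue = deque()  # assumed big enough for months of samples
        self.uploaded = []

    def receive(self, samples):
        self.queue.extend(samples)

    def upload(self):
        # Forward everything queued to the data centre
        while self.queue:
            self.uploaded.append(self.queue.popleft())


def simulate(minutes, bluetooth_up, internet_up):
    """One sample per minute; the two callables say which links are up at minute m."""
    aid, phone = HearingAid(), Phone()
    classes = list(Environment)
    for m in range(minutes):
        aid.record(Sample(m, classes[m % len(classes)]))
        if bluetooth_up(m):
            phone.receive(aid.drain())
        if internet_up(m):
            phone.upload()
    return phone.uploaded
```

In this model, `simulate(20, lambda m: not (10 <= m < 15), lambda m: True)` loses the samples from minutes 10 to 14, whereas `simulate(20, lambda m: True, lambda m: m == 19)` still delivers all 20 samples once the Internet connection comes back in the final minute: a Bluetooth dropout destroys data, an Internet outage merely delays it.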

As well as the Opns and the Galaxy A, the original design of the Evotion project included a wrist activity tracker. The intention was to be able to put the data from the hearing aids in the context of the user’s physical activity at the time. In the event, it seems the Evotion team failed to acquire a manufacturing affiliate. This meant the loss of a useful side-light that would have added another dimension to the project. But the overall impact on the project, I’m told, is small. The activity tracker I’m using today (the MiFi 3) is no part of the Evotion project.

The provenance of the name “Evotion” isn’t clear. But whoever came up with it obviously had failed to do any prior art research. If you look on YouTube for “Evotion” there’s a good chance you’ll find the project. But there’s a better chance that you’ll stumble on the other “Evotion”, described as a “male chastity wearable cage”. This is not the place for a detailed digression—suffice it to say the device is worn on the body at some distance from the ear.

Less is… Less

The Teneo M+ (upper) is larger and heavier than the Oticon Opn.

The Oticons are considerably smaller than my previous Teneos, so small and light, in fact, that I need to put a finger behind my ear to make sure they’re there. Smaller is better, of course. But less is also less.

Primarily, it seems they’re less likely to stay firmly in place. On several occasions, I’ve found an Opn dislodged and dangling by its wire, held only by the grip of the polyurethane dome in my ear canal. The much larger Teneos, on the other hand, had a gravitas that avoided this indignity. Were they more visible? Perhaps. Did I care? No.

My colleague has since had his domes changed to include a whisker (like the one on the Teneo M+ dome in the picture above) which curls like a light spring into the folds of the pinnae and helps to hold the hearing aids in place.

On the Opns, a long press on the left or right button respectively decreases or increases the programme number. The programmes roll round, so an increase from 4 takes you back to 1, and a decrease from 1 takes you to 4.
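
The roll-round is just modular arithmetic. Here is a minimal sketch using a hypothetical `next_programme` helper; it illustrates the behaviour and is not Oticon firmware.

```python
NUM_PROGRAMMES = 4  # the Evotion set-up described here uses four programmes


def next_programme(current, step):
    """Programme selected after a long press (hypothetical helper).

    current: programme number, 1..NUM_PROGRAMMES
    step:    +1 for the right button (increase), -1 for the left (decrease)
    """
    # Shift to 0-based, wrap with the modulo operator, then shift back to 1-based.
    # Python's % returns a non-negative result here, so stepping down from 1 gives 4.
    return (current - 1 + step) % NUM_PROGRAMMES + 1


print(next_programme(4, +1))  # 1
print(next_programme(1, -1))  # 4
print(next_programme(2, +1))  # 3
```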

One final, and very obvious, sense in which less is less is battery life. The Teneo takes a size 13 battery; the Oticon miniRITE uses the significantly smaller size 312. I find I’m having to change the Oticons’ batteries every 5 days, whereas the Teneos’ batteries would last for over a week and a half.

But it’s not just a matter of battery size. See Thirsty Bluetooth, below.
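
A rough calculation bears this out. The sketch below assumes typical nominal capacities for zinc-air cells (about 290 mAh for size 13 and 170 mAh for size 312; real figures vary by manufacturer) and uses the battery lives quoted above.

```python
# Assumed typical nominal capacities of zinc-air hearing-aid cells (mAh);
# these are illustrative values, and real figures vary by manufacturer.
CAPACITY_MAH = {"13": 290, "312": 170}


def daily_drain(cell, days):
    """Average charge drawn per day (mAh/day) if a cell of this size lasts `days`."""
    return CAPACITY_MAH[cell] / days


teneo = daily_drain("13", 10.5)  # "over a week and a half", so an upper bound
opn = daily_drain("312", 5)
print(f"Teneo ~{teneo:.1f} mAh/day, Opn ~{opn:.1f} mAh/day, ratio {opn / teneo:.2f}")
# Teneo ~27.6 mAh/day, Opn ~34.0 mAh/day, ratio 1.23
```

Because the Teneo figure is an upper bound, on these assumptions the Opns draw at least about 23% more charge per day than the Teneos did, which the smaller cell alone doesn’t explain.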

The Four Programmes

Like the commercial Oticon Opns, the Evotion version offers the user a choice of four different programmes. The Evotion team has set these to be:

- General: 360° BrainHearing programme (see below)

- Noisy: some side and rear attenuation with front focus

- Noisier: more extensive side and rear attenuation with front focus

- Music: minimal automatic volume control and noise reduction

For me, the distinction between these programmes is very slight. I take this to mean BrainHearing* is working well.

BTE versus RIC

The “receiver” in the canal is the blue-tipped component that fits into the dome.

Size isn’t the only physical difference between these new Opns and the Teneos. Although both are “Behind-the-Ear” (BTE), the Teneos are classed as such because that’s where all the operating components are placed. Crucially, the transducer creating the output soundwaves is embedded in the main body of the Teneo, the sound being conveyed into the ear canal through a thin, translucent air tube that is a purely passive component.

Convention (presumably stemming from the origin of the technology in the early days of telephony) calls this output transducer “the receiver”. It sounds topsy-turvy to me (see Box). But we’re stuck with “receiver” because the word is used to differentiate between the sound delivery mechanism of BTE devices like the Teneos and the class to which these new Oticon Opns belong. They’re called “Receiver in Canal” or RICs.

Inevitably, there will be some high-frequency loss in any air tube. And placing the output transducer in the same small chassis as the pair of microphones raises the issue of “howlround”—audio feedback when the microphones pick up some of the output signal. Careful physical positioning of the components and some electronic wizardry can ameliorate this. But moving the output transducer from the chassis to the ear canal is by far the best engineering solution.
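
Howlround can be framed as a simple gain budget: feedback becomes self-sustaining when the aid’s gain is at least as large as the attenuation of the acoustic path leaking from the output transducer back to the microphones (and the phase lines up, which this sketch ignores). The figures are purely illustrative assumptions, not measurements of either device, and the function names are hypothetical.

```python
def loop_gain_db(aid_gain_db, leak_attenuation_db):
    """Net gain around the feedback loop: amplifier gain minus leak-path loss (dB)."""
    return aid_gain_db - leak_attenuation_db


def risks_howlround(aid_gain_db, leak_attenuation_db):
    # At or above 0 dB loop gain, an in-phase leak builds up instead of dying away
    return loop_gain_db(aid_gain_db, leak_attenuation_db) >= 0


# Hypothetical figures: the same 40 dB of gain, with the output transducer
# either in the chassis beside the microphones or down in the ear canal
print(risks_howlround(40, 30))  # transducer in the chassis: True
print(risks_howlround(40, 50))  # transducer in the canal: False
```

Moving the transducer further from the microphones raises the leak-path attenuation, which leaves more headroom for gain before the loop reaches 0 dB.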

*Oticon BrainHearing™

It might sound like a catchy advertising gimmick, but there’s a good deal of science behind BrainHearing. We’ll need to take a brief stroll through the history of hearing aids to put it in perspective.

The first electronic hearing aids, following the invention of the vacuum tube at the start of the 20th century, were purely analogue amplifiers. They did little more than the old mechanical ear trumpet that had been in use since the 17th century. They were smaller and more manageable, but like the ear trumpet, they just made everything louder.

By the middle of the century, transistors were beginning to replace vacuum tubes. Devices could now be even smaller, but functionally they were still ear trumpets. They were still mostly amplifying all the sound, noise as well as speech, although with analogue filtering it was now possible to make a coarse-grained distinction between the amplification of different frequencies.

It wasn’t until transistors evolved into ever smaller components that eventually, by the 1970s, they could be assembled by the thousands onto a single chip the size of your thumbnail. With this radical development, the digital microprocessor was born.

This was the change that deserved the term “revolution”. Sound waves could now be transmuted into rapidly changing streams of ones and zeros, and those streams could be analysed and modified in real time before being turned back into sound waves. Now it became feasible, in small devices, to separate out the frequencies precisely and to deliver amplification tailored to the particular frequencies where the hearing-impaired ear needed a boost.
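
Per-frequency amplification of this kind can be sketched in a few lines. This is a minimal illustration under stated assumptions: a single short frame processed with a naive discrete Fourier transform, and a hypothetical gain prescription given as (low Hz, high Hz, gain dB) bands. Real hearing aids use efficient filter banks or overlapping FFT blocks, and nothing here is taken from any manufacturer’s processing.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (O(n^2), fine for a short demo frame)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); keeps the real part, since the input frame was real."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def apply_band_gains(frame, sample_rate, bands):
    """Amplify each frequency band by its own gain in dB.

    `bands` is a hypothetical prescription: a list of non-overlapping
    (low_hz, high_hz, gain_db) tuples. Bins outside every band are unchanged.
    """
    n = len(frame)
    spectrum = dft(frame)
    for k in range(n):
        # Bins above n/2 mirror the negative frequencies, so treat k and n-k alike
        freq = min(k, n - k) * sample_rate / n
        for low, high, gain_db in bands:
            if low <= freq < high:
                spectrum[k] *= 10 ** (gain_db / 20)  # dB to amplitude factor
    return idft(spectrum)
```

For example, `apply_band_gains(frame, 16000, [(2000, 8001, 20)])` leaves the low frequencies alone and boosts everything from 2 kHz upwards by 20 dB (a factor of 10 in amplitude), the kind of high-frequency emphasis that age-related hearing loss usually calls for. The upper edge of 8001 Hz simply makes sure the 8 kHz bin at the top of the spectrum is included.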

Directionality became a valuable factor in separating speech from noise. It was now possible, for example, to focus the sound attention on the area immediately in front of the wearer, minimising sound from the sides and rear, whether these were speech or noise. This aural equivalent of “tunnel vision” was offered as a switchable programme. Later, more sophisticated devices could switch automatically into this mode when the context seemed to demand it.
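
Front focus of this kind is classically produced by combining the two microphones on each aid in a delay-and-subtract (first-order differential) arrangement. The sketch below shows the principle under simplifying assumptions: plane waves arriving exactly along the front-back axis, and an inter-microphone travel time of a whole number of samples. It illustrates the textbook technique, not Oticon’s own directional processing, and the function names are hypothetical.

```python
def delay_and_subtract(front, rear, delay):
    """First-order differential ("delay-and-subtract") beamformer.

    Subtracting the rear microphone's signal, delayed by the time sound takes
    to travel between the two microphones, cancels sound arriving from behind
    while letting sound from the front through.
    """
    out = []
    for n in range(len(front)):
        delayed_rear = rear[n - delay] if n >= delay else 0.0
        out.append(front[n] - delayed_rear)
    return out


def mic_signals(source, delay, from_front):
    """Simulate the (front, rear) microphone signals for a source ahead or behind.

    `delay` is the assumed inter-microphone travel time, in whole samples.
    """
    lagged = [0.0] * delay + source[:len(source) - delay]
    # Sound from the front reaches the front mic first; from behind, the rear mic first
    return (source, lagged) if from_front else (lagged, source)
```

With a source directly behind the wearer the output is exactly zero, while sound from the front passes, somewhat tilted towards the higher frequencies because the subtraction weakens the lows.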

This is true, natural “brainhearing” and this is what Oticon is attempting to emulate. Oticon’s tools to accomplish this include the virtual array of four stereo pairs that can be constructed from the NFMI-connected dual microphones in each hearing aid, as well as the new capabilities of more recent low-power microprocessors that are now fast enough to deal with complex data sets in real time.

Some important limitations stand in Oticon’s way, however. Microphones situated behind the ear can’t take advantage of the evolutionary cunning of those auricular folds. And, in comparison with the unimpaired ear, important directional information present in the very highest audible frequencies is inevitably lost, beyond the capabilities of the electronics to handle and in any case typically unavailable to the natural mechanism of the impaired inner ear.

Oticon’s BrainHearing is therefore inevitably a compromise. It seems to me evolutionary, rather than revolutionary, an inevitable development arising from technological improvements. But quibbling with the brochure vocabulary isn’t fruitful. The question should be: Is this electronic “brainhearing” useful?

The answer, I suspect, is very much going to depend on the individual. In my own case, it’s a resounding Yes.

Thirsty Bluetooth

Bluetooth has been around for over 20 years now, first introduced as a low-power means of wireless communication between devices. It’s now ubiquitous and remains low-powered in comparison with other well-known wireless protocols like WiFi. But it’s not remotely low-powered enough to earn a place in tiny devices like the Opn hearing aids.

That’s my opinion, of course. But consider this: all of today’s modern hearing aids already have wireless connectivity. They connect with one another using a wireless pathway through the skull running at a megahertz frequency that (unlike the much higher gigahertz frequency of Bluetooth) creates no heat as it passes through human brain cells and poses no biological risk. It also uses a very different aspect of electromagnetic wave propagation.

As you might guess from the name, electromagnetic waves are composed of oscillating electric and magnetic fields. These fields are at right angles to one another. Design of the propagating electronics and the antenna can favour one type of field or another. The ear-to-ear communication of modern hearing aids uses the modulation of magnetic fields to convey the information. The technology is called Near Field Magnetic Induction (NFMI).

NFMI has one big disadvantage compared with Bluetooth: it’s extremely short-range. Bluetooth, as implemented in phones, typically works happily at ten metres or more. Two metres away from an NFMI transmitting hearing aid, the signal will have completely evaporated. Move along, nothing to see here…
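
That steep fall-off follows from the physics. Close to a small magnetic loop antenna, the magnetic field weakens roughly as the cube of distance (about 60 dB for every tenfold increase), whereas a radiated far-field signal such as Bluetooth’s weakens roughly in proportion to distance (about 20 dB per tenfold increase). Here is a sketch comparing the two idealised laws, assuming a 10 cm reference distance and ignoring antenna design, the body and obstacles.

```python
import math


def field_loss_db(distance, reference, exponent):
    """Drop in field strength (dB) from `reference` to `distance` (metres),
    for an idealised field that falls off as 1 / r**exponent."""
    return 20 * exponent * math.log10(distance / reference)


# Loss relative to an assumed 10 cm reference distance
for d in (0.2, 1.0, 2.0):
    nfmi = field_loss_db(d, 0.1, 3)      # near-field magnetic: roughly 1/r^3
    radiated = field_loss_db(d, 0.1, 1)  # far-field radiation: roughly 1/r
    print(f"{d:>4} m: NFMI -{nfmi:.0f} dB, radiated -{radiated:.0f} dB")
```

At 2 m the idealised NFMI field is down by about 78 dB against about 26 dB for a radiated signal, which is why the hearing aids’ ear-to-ear link simply vanishes at conversational distances.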

Did I say “disadvantage”? Try telling that to the military. On the battlefield, the fewer wires and cables used to connect body-worn equipment the better. Bluetooth would do the job, but would also be a beacon to enemy snipers. NFMI creates an ultra-reliable, secure, highly localised wireless communications bubble, sometimes known as a “body area network” (BAN), that’s undetectable beyond arm’s length. It’s also far less susceptible to radio-frequency interference.

And NFMI is many times (between 5x and 10x) more power efficient than Bluetooth.

NFMI would be the backbone of an ideal BAN if the technology were included in smartphones**. And there are some signs that that may happen. In the meantime, Bluetooth, ubiquitous, thirsty Bluetooth, remains the primary choice for short-range wireless links to smartphones.

Watch out for snipers.

The Project and the Product: Conclusions

Recruitment for the Evotion project closed in the autumn of last year and the full trial winds up at the end of this coming October. I’ve set out its general principles but you can read a full technical description of its aims here. It’s turned out to be an exciting and (as I hope is clear from what I’ve written here) deeply fascinating ride.

No, that isn’t really what I want to say about it. I’m failing to convey the quite extraordinary privilege of deriving enormous personal benefit while at the same time feeling I’m being allowed to make a small, useful contribution to what has a chance of becoming a vast social good. Hearing loss begins as a subtly spirit-eroding disability, suffered by five per cent of the world population. The Evotion project is no magic wand. But it is a sensible, scientific path towards a solution.

Oticon

Thanks, too, of course, to Oticon. I can certainly recommend these Opns. One very important distinction (for me) from my previous Teneos is their handling of music. When I switch to Programme 4 I seem to be hearing the full abundance of the orchestra, just as I did in my youth. In fact, the difference from the general-purpose Programme 1 is only slight, the noise cancellation and dynamic limiting of that programme being subtly and intelligently tuned to the environment.

Is this performance improvement over my previous Teneos enough to justify the change if I’d had to pay the full price? A better question might be: would I recommend that a UK citizen turn down the offer of free hearing aids from the NHS and instead dip into their piggy bank for the couple of thousand pounds an independent audiologist would charge for the very latest models?

The core problem for hearing aid users seems to me to be that we only have experience of the hearing aids we’ve used and find ourselves totally in the hands of audiologists with a much wider experience, a great deal of knowledge and (in at least some cases) an incentive to upsell us to high profit-margin devices.

NHS hearing aids tend to be around one generation behind the current state of the art (but still capable of doing a damn good job). One corollary of this is that what you’ve read here should be relevant to NHS audiology patients for the next five years at least.

The hardware, though, is only part of the equation. The service (shaping the devices to your individual needs, expert follow-ups, battery and spares supplies) I’d argue is even more important to our ongoing welfare. And despite the well-publicised budget struggles, NHS staff in my experience perform magnificently. Compared with the private hearing aid market, the NHS is an absolute bargain (ask any of our Transatlantic cousins).

For those without access to the NHS, or anyone with the cash easily to spare, I can think of no better way of spending that kind of money. We’re seldom fully aware of the extent of our hearing loss. And the improvement to our whole enjoyment of life that well-adjusted, good hearing aids can bring—well, it has to be heard to be believed.

Chris Bidmead