The word “cache” comes from the French, cacher, meaning “to hide”. The concept turns up all through information technology in a number of different contexts and physical implementations. But these all share the idea that something—usually a set of binary data—is being kept somewhere in a “hidden pocket”.

Typically, this will be a bunch of data on its way to somewhere else. The cache pretends to the sending system that the data have arrived at their destination and will at some point relay them on there. In this way, a communication channel that may be slow can be represented to the sending system as being speedy.

As we’ve discussed, all data newly written to the UnRAID array has to have its parity calculated and the result written simultaneously to the parity drive. The delay while this calculation and double drive write happen can make the UnRAID system look slow to accept data. An intervening cache drive, needing none of the parity calculations, can speed up the data transfer. And speed it up significantly if it is a solid state drive (SSD).

I WAS GOING TO START THIS CHAPTER by telling you that the SSD cache would be “adding the final touch” to this UnRAID NAS. That’s quite the wrong way of putting it.

Yes, this chapter is the last planned for this series. But one thing I’ve come to realise during this adventure is that there’s no “final touch” to an UnRAID server. Unlike, for example, Manek’s TrueNAS system, which you design, build and then that’s it—until you need to expand it, a process that will almost certainly demand another design and build. An UnRAID system is elastic, whether you want to expand it, contract it, run other stuff inside it or around it or even move it (as we’ve already seen) from one chunk of hardware to another.

I’ll get onto the addition of the cache drive in a moment. (The truth is, this process is so simple there’s not much to write about). But while we’re philosophising about UnRAID, let me list here some thoughts that have struck me about how it might typically develop for you if you follow this path.

I’ve said that what originally attracted Tested Technology to the UnRAID proposition was being able to start with whatever drives you have to hand: you can shuck them from USB storage devices, you can rescue them from defunct desktops and laptops, release them from the prison of a more stringent NAS. Then you pile them into a pensioned-off PC, run them through the gruelling preclear process and, if they pass, assign them to the array. And now you have a powerful, truly flexible, data storage device.

You may be happy with this. The odd hard drive will fail now and again and you will be able to swap it out for a new one without loss of data. If you take this opportunity to invest in one of the newer larger drives you’ll be able to install that one as the replacement parity drive and reassign the previous parity drive to duty as a data drive in the array. As drives become larger your array will be able to shrink in drive numbers while increasing in capacity. And all this will be happening dynamically—this is what I’m calling the “organic quality” of UnRAID you won’t find elsewhere*.

Less is more.

Why would you want fewer drives?

Firstly, as we’ve seen, the price hot-spot of hard drives tends to shift to the newer, larger drives, though perhaps not the very newest at their time of arrival on the market. And as the technology develops, the newer drives tend to be more reliable.

Larger drives mean you will need fewer of them for the same capacity. You’ll save on power consumption and you’ll be reducing the points of failure.

You might then reasonably ask me “Why eight bays, then, Bidmead?” Our Plan B started with UnRAID running very nicely on a mere 4-bay device. Why expand this to eight?

The first answer is that the additional drive bays provide room to improve resilience. An extra cache drive can mirror the first to ensure that transient data won’t get lost*. And a second parity drive, not mirroring the first but using a different parity algorithm, would allow for two drive failures without data loss.

There’s another answer for those less concerned about data loss. While many UnRAID builds are based, as we originally intended, around an old Intel PC, those first days with the UnRAID-converted QNAP TS-451 convinced me that hot-swappable drive bays are a great thing to have, particularly if you’re experimenting. And if you have hot-swappable bays, they’re a very useful place to keep drives, whether they’re part of the array or not.

The UnRAID community’s UnAssigned Devices app allows you to use these drives outside the array. So, apart from the two data drives, the parity drive and the cache that currently make up the official UnRAID array, the extra four bays give Tested Technology the option of running a more or less autonomous JBOD server from the same device.

The minor downside of sharing unassigned devices is that you can only share them as entire drives or whole partitions and the shares all have to be public—that is to say, not password protected. This is a limitation in the current 6.8.3 version of UnRAID. But I understand a future version may allow more flexibility in how unassigned devices are presented to the LAN.

That Hidden Pocket

We’ve seen how adding a regular drive to the array is a matter of associating it with an UnRAID logical drive slot. Adding a cache drive follows the same procedure but using a special drive slot called Cache. There’s also a second Cache slot in case you feel you need to mirror the cache.

This is something you might want to do if you’re using some of the new, much cheaper no-name SSDs. Your UnRAID cache arrangement is considered to be part of the array but won’t participate in the parity checking. Its bits aren’t included in the parity calculations, meaning that it makes no contribution to protecting the main data drives and in turn is unprotected itself.

The philosophy seems to be that cache drives aren’t inherently trustworthy and the data they handle will mostly be relatively low volume and transient. According to a schedule you set up, an internal UnRAID function called The Mover (a name that sounds like a 1970s TV series you may have missed) rises from slumber (typically overnight but at any time of your choosing) and shifts the data intended for particular shares into the corresponding parity-protected storage.

This is the second key point about the cache. It’s operating on a per share basis, not per drive or universally across the whole array. And although an UnRAID share can be associated with a particular drive, like the normal PC arrangement, conceptually it floats independently across any or all of the drives in the array as you determine when setting up that share.

Whereas a classic RAID arrangement will distribute any and all data across the drives in accordance with its own pre-ordained internal schema, with UnRAID, you get to decide whether a share is going to reside on Drive 1 or Drive 2 or Drive n or all of them or just several of them. And also how or whether directories are to be split between the drives. However you design this, no individual files will ever be split across multiple drives. Good news if you ever need to recover data from a drive disaster.

As system admin, you have choices about how or whether each share uses the cache. Across the LAN, the user will always see those data as being inside the share. But under the covers, they might be on the cache or the array. The per share options are:

- No: none of these data will touch the cache

- Yes: all data will be written to the cache and later moved to the array

- Only: all data will be written to the cache and will stay there

- Prefer: data will remain on the cache and any array data for that share will be transferred there

The No and Only choices remain untouched by the Mover. The Yes and Prefer choices use the Mover.
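For readers who think more easily in code, here’s a rough Python sketch of those four settings. It isn’t UnRAID’s own code: the names `CacheMode`, `write_target` and `mover_action` are invented for illustration, and it ignores what happens when the cache fills up.

```python
from enum import Enum


class CacheMode(Enum):
    """The four per-share cache settings listed above."""
    NO = "No"
    YES = "Yes"
    ONLY = "Only"
    PREFER = "Prefer"


def write_target(mode):
    """Where a newly written file lands first (hypothetical helper)."""
    return "array" if mode is CacheMode.NO else "cache"


def mover_action(mode):
    """What the scheduled Mover does for a share in this mode (hypothetical helper)."""
    if mode is CacheMode.YES:
        return "cache -> array"
    if mode is CacheMode.PREFER:
        return "array -> cache"
    return None  # No and Only: the Mover leaves the share alone


for mode in CacheMode:
    print(f"{mode.value:7} writes to {write_target(mode):5}  mover: {mover_action(mode)}")
```

The point to notice is that Yes and Prefer are mirror images of one another: the Mover runs in both cases, but in opposite directions.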

Installing the Cache Drive

*Two screws are enough for both 3.5″ and 2.5″ drives. Just as well—these QNAP caddy screws turn out to be expensive and hard to find. Although the thread is common enough, the heads need to be completely countersunk and flat to avoid snagging on the bay rails. I was a screw short and had to grind one down to fit.

No big deal, as I’ve said. The QNAP caddies have screwhole positions for both 3.5″ and 2.5″ drives*. Once installed in the caddy, the 2.5″ cache SSD drive slides in and locks in position like any other drive.

This particular cache SSD, though, is rather special and we came upon it through sheer good luck and timing.

Tested Technology was discussing, via email, the product range that the Californian company OWC offers, mostly expansions and upgrades for Apple users. OWC stands for “Other World Computing” and is built around some interesting ideals that we thought were worth further investigation. Among their product range is a series of SSDs and we asked if they’d fancy contributing one to this UnRAID series.

They came through in spades with a Mercury Pro Extreme 6G, a top of the range endurance SSD that comes with 24/7 online support and a five-year warranty to match the Seagate IronWolfs and the Exos.

I’ve mentioned that if you don’t trust SSDs you can mirror a pair of them as an extra precaution. A close alternative would be to install an SSD that’s much bigger than any capacity you might envisage needing. SSDs wear out and they wear out faster as they approach their capacity. Having plenty of spare wriggle room allows the SSD’s built-in controller to distribute the wear more easily. Manufacturers will typically allocate some 7% of extra space, not cited in the specs and not directly accessible to the user, for this purpose. But nothing beats having very much more space than you’re going to be using.

A third choice might be to ensure your SSD uses RAISE. This is a technology that was developed by the controller design company, Sandforce, about a decade ago. The acronym stands for “Redundant Array of Independent Silicon Elements” and borrows its ideas from RAID.

RAISE controllers work very similarly to RAID 5. An SSD is made up of a number of separate memory components (called “dies”) and RAISE treats these in much the same way as devices in a RAID 5 array, spreading the data across multiple dies along with enough parity information to enable recovery from a failure in a sector, page or entire block.
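The RAID 5 idea being borrowed here can be shown in a few lines of Python. This is only a sketch of plain XOR parity, which is how RAID 5 protects a stripe. The internals of a real RAISE controller aren’t described here, and the three “dies” and their contents are made up for the example.

```python
from functools import reduce


def xor_parity(chunks):
    """XOR equal-length byte strings together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


# Three hypothetical "dies", each holding one chunk of a stripe.
dies = [b"unRA", b"IDed", b"data"]
parity = xor_parity(dies)

# Suppose die 1 fails. XOR the surviving chunks with the parity to rebuild it.
survivors = [dies[0], dies[2], parity]
rebuilt = xor_parity(survivors)
print(rebuilt)  # b'IDed'
```

The same trick works whichever single chunk goes missing, which is why one chunk’s worth of parity is enough to cover any one failure in the stripe.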

Although SSDs are much less vulnerable to physical mishandling (unlike hard drives, they’re virtually unshockable), the writing and reading of individual bits takes a much heavier toll than on rotating mechanical memory. In fact, individual bits are never written to SSDs—to change just a single bit, an entire block has to be read into temporary memory, the bit changed there, and then the whole block written back to the SSD.

SandForce controllers that implement RAISE are widely used in SSDs across the industry. But consumer-class SSDs tend to leave RAISE switched off in order to offer a larger capacity for the same amount of raw storage.

Drives employing RAISE are said to have a quadrillion fewer uncorrectable bit errors than UnRAISEd drives. That’s in America, where a quadrillion is a mere 1,000,000,000,000,000. The British quadrillion is considerably more expensive at 1,000,000,000,000,000,000,000,000, but that seems to have gone the way of the guinea*.

(*One pound sterling and one shilling (£1.05), for younger readers. The guinea coin was no longer minted after 1816. But up until the Second World War, professional fees and luxury items were still being priced in guineas.)

This OWC SSD seems to be the perfect solution to a single SSD UnRAID setup. It has enormous capacity—2TB—and uses RAISE, so we’re very grateful to OWC for the donation. Of course, you can always implement UnRAID cache with a smaller, cheaper SSD (and they’re getting cheaper fast). But in that case, we’d definitely recommend doubling up with a pair of mirrored cache drives.

Speed Trials

In chapter 1 we looked at the raw read speed of a rotating drive on the array. Now that we’ve added a cache we can examine how this improves the speed of writing to the array.

That last sentence needs a careful think. You’ll recall that any writes to the array using the cache won’t really be using the array at all—it’s simply that the data are going to end up on the array once the Mover has done his thing.

Most of the writing to the UnRAID NAS will be from client devices across the LAN. But I thought it would be a useful start to check the effect of the cache on the data transfer speed from one drive on the NAS to another.

This sort of inter-drive transfer isn’t something you get to do on a regular NAS but is bread-and-butter to UnRAID. There will be files on unassigned devices that you want to transfer to the array; there will be directories split between different drives because you asked UnRAID to do this, but which you now want consolidated onto a single drive. You’ll also want to check across drives for duplicate files and sync directories that are meant to be mirrors of one another.

You do all this kind of work using standard Linux utilities like Krusader (the file manager) and DupeGuru (for deduping). Whereas files and directories on a conventional RAID NAS exist in a “black box” only visible through the WebUI, with UnRAID you can open the side of the fish tank and peer right in.
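To give a flavour of what “peering right in” makes possible, here’s a minimal Python sketch that looks for duplicate files across two drives by comparing content hashes. It’s not how DupeGuru itself works, and the mount points in the usage comment are assumptions for illustration; substitute whatever paths your own drives appear under.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 of a file, read in 1 MiB chunks to spare memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        while chunk := handle.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(roots):
    """Group files under the given directories by identical content."""
    by_digest = defaultdict(list)
    for root in roots:
        root = Path(root)
        if not root.is_dir():
            continue  # skip anything that isn't mounted
        for path in root.rglob("*"):
            if path.is_file():
                by_digest[file_digest(path)].append(path)
    return [paths for paths in by_digest.values() if len(paths) > 1]


# Example usage (hypothetical paths):
# for group in find_duplicates(["/mnt/disk1/Media", "/mnt/disk2/Media"]):
#     print(" == ".join(str(p) for p in group))
```

Hashing every file is slow on big drives. A more careful version would compare file sizes first and only hash the files whose sizes match.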

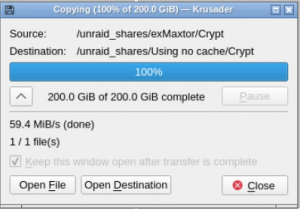

By way of example, here’s Krusader transferring that 200GB VeraCrypt file from one array drive across to another, without using the cache.

We can repeat the exercise using the cache. Although the target directories here are called “Using no cache” and “Using cache”, in fact they’re the same logical UnRAID directory. In other words, I’ve created two shares that land their contents in the same place, one of which, though, diverts incoming traffic through the cache drive.

You’ll see that in this instance the cache provides a significant speed boost of around 40%.

I was getting similar results for transfers from unassigned devices to the array. That’s the speed the fish are swimming inside the tank. But, as I say, the most generally useful measurement would be for data transfer speed across the LAN.

This is going to be a looser metric as there are more factors playing into the transfer. The QNAP TS-853 Pro has four Ethernet ports and these can be bonded to produce a theoretical transfer speed of 4Gb/sec. This sounds fast.

But, hold on, these are bits we’re talking about. You can rule-of-thumb divide this figure by 10 to get bytes (8 bits to a byte and two more bits to account for control and check protocols). So those four bonded Ethernet links are at the very best only delivering around 400MB/s. This is approaching SATA III speeds but as we’ve seen, actual disk transfers are well below that anyway. So using the full complement of Ethernet ports wouldn’t create a bottleneck.

But we currently have the TS-853 Pro wired into the network with only a single Ethernet cable. That’s 100MB/s, tops. It’s not hard to see that unless this 1Gb/sec connection is performing completely transparently it might have some impact on data transfer speeds.
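The divide-by-ten rule of thumb is simple enough to put into a few lines of Python. The function name and its parameters are my own invention, and the ten-bits-per-byte figure is the rough allowance described above, not a measured overhead.

```python
def usable_megabytes_per_sec(link_gbit_per_sec, links=1, bits_per_byte_on_wire=10):
    """Rule-of-thumb payload rate: 8 data bits plus about 2 bits of overhead per byte."""
    megabits = link_gbit_per_sec * 1000 * links
    return megabits / bits_per_byte_on_wire


print(usable_megabytes_per_sec(1))           # single cable: 100.0
print(usable_megabytes_per_sec(1, links=4))  # four bonded ports: 400.0
```

Real-world throughput will vary with the protocol and the kind of files being moved, so treat these as ceilings to compare against rather than predictions.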

Another factor would be the speed at which the data are pulled off the source device. In this test, we’re using Tested Technology’s 4-bay TS-451+. And in this instance, instead of pulling in a single large file, the UnRAID device will be soliciting a large number of smaller files. These requests will be consuming additional transfer bandwidth. And the TS-451+ will have to hunt out the location of each individual file, another potential drag on the transfer.

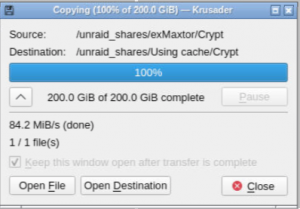

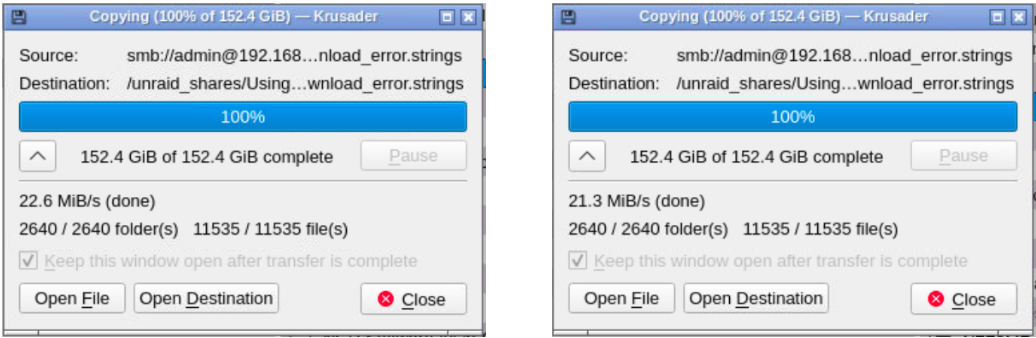

We can use Krusader running inside the UnRAID NAS again for this test as it knows how to attach shares from across the LAN.

Here’s how it pans out.

This surprised me. The figure of 21.3 MB/s on the right is using the cache. Statistically and practically, the difference is too close to call.

But something else was going on with the UnRAID system at the same time. It’s scheduled to run a parity check every month. This has all the drives churning pretty solidly for about a day and a half. Although I don’t see any degradation of services like movie streaming, the WebUI Dashboard shows me that the processor is working very hard. Would this be responsible for the unimpressive cache result?

In fact, no. Tests with and without the parity check running, whether transferring this directory of multiple files or the single 200GB VeraCrypt file, indicated that, for the transfer of files over our 1Gb/sec LAN, the cache has no appreciable effect on write speed.

One interpretation might be that Tested Technology needs a faster LAN. But looked at the other way, you might say that these fast-spinning Seagate enterprise drives are a good match for a NAS wired into a 1Gb/sec LAN, and setting up a cache is redundant. With slower NAS drives the cache might make a difference.

That’s not to say that our internal SSD has no purpose. We’ve seen how it speeds data transfers inside the NAS, which could be particularly useful for drive-intensive operations like deduping. An SSD is also the ideal location for docker and VM images.

The OWC Pro 6G stays. It’s just that we’re probably not going to be setting our UnRAID shares to “Use cache pool, Yes”.

Shutdown

That’s as far as I want to take this adventure for the moment. UnRAID is a huge proposition and we’ve only been able to offer you a taste of it in these five chapters. I hope that taste has given you an appetite to investigate further.

If so, there’s plenty of detailed information on the Web. You might want to start with YouTube and I can’t think of a better launchpad than SpaceInvader One’s channel, perhaps kicking off with this one. Other videos on his channel can guide you into the installation and management of dockers and VMs, which we’ve hardly touched on here. The UnRAID Forum is another valuable source of help.

Oh, but wait… There’s more. Prasanna, the eBay guy who put us on to the QNAP solution has offered a supplement about his own new build.

Chris Bidmead: 02-Jan-21