A centralised server in your home or office—usually called a NAS (Network Attached Storage)—can be a great way to make sure that all your information and entertainment material is secure and available wherever and whenever you need it.

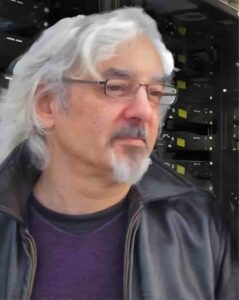

Veteran IT journalist Manek Dubash tells us how you can roll your own server—and why hardware selection isn’t always the most important decision.

When it comes to storage, you can buy a proprietary solution or you can use free software and repurpose old hardware to store your media collection and to act as a backup repository for all your various home devices. And maybe to learn something along the way.

That’s the route Manek lays out for us here.

WE’RE ALL DIGITAL NATIVES NOW—we all have documents, images, videos and music stored in digital form. Just like the rest of life, the stuff piles up. Images sit in cameras, on laptops and in phones. Music files spread themselves across your devices, while videos gobble space on your hard drives, phones and tablets. It quickly turns into a bit of a mess.

Of course, you can always give in and just upload the files into cloud storage. But that means you’ll be paying to access your own possessions forever—or lose them. An alternative is to stream your media from the likes of Amazon or Netflix. But that’s only a partial solution as it doesn’t include your own stuff, and you have zero control over what’s available.

So sooner or later, you need to do something to sort out this mess. The best answer, given the current state of technology, is to install a dedicated storage system in your home. It ticks all the boxes—control over content, control over costs, a centralised bucket for your stuff, and no subscription to pay.

Buy or build?

Having arrived at this conclusion, you might start wondering how to go about it. Here the paths diverge. Either you buy a commercial, off-the-shelf device (there are dozens to choose from) or you build your own. Buying a NAS has the advantage of convenience and simplicity. Sounds good?

A proprietary NAS will offer you a bunch of features—the ones the vendor has decided you need. But what if you wanted to pick and choose your own features? What if you decided you wanted all the best features available—such as a file system that is as robust as they come? What if you wanted to add new functions to the box?

And if you already have an old, superseded computer hanging around, why chuck it away? You can put some hard disks and a cheap motherboard in it, connect it to your home network, and you’ve created your own dedicated NAS. What’s more, repurposing an old machine is better for the environment than buying anew.

Commonly, the hardware is a fairly low-powered computer consisting of a motherboard (the circuit board housing the main components), the central processing unit (CPU), the random access memory (RAM), and probably a fan or two to keep the equipment cool.

Connectivity requires Ethernet, usually with USB to attach external drives and perhaps printers. The rest of the box comprises bays and cables for storage devices—typically NAS-specific hard drives.

Every computer needs an operating system of course, and commercial NAS products are almost always based on a form of Unix—of which Linux is but one, albeit a major, offshoot.

As a buyer of a ready-to-run NAS box, you will—the vendor hopes—never see the underlying operating system because it will be overlaid with a graphical user interface that gives you access to all the functions and features that the vendor believes you will ever need.

And it’s this set of features that often provides the major differentiator between the various proprietary NAS products on the market. In other words, more features command a higher price.

A NAS is a device you typically leave running the whole time, so electricity consumption is something worth thinking about. Old computers can be power-hungry; modern hardware tends to use electricity more efficiently. A dedicated proprietary NAS like the Synology DS120 may turn out to be cheaper and more eco-friendly in the long run.
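To put rough numbers on the running cost, here is a back-of-envelope sketch in Python. The wattages and the electricity tariff are illustrative assumptions, not measurements of any particular machine, so substitute your own figures.

```python
HOURS_PER_YEAR = 24 * 365  # a NAS is typically left running the whole time


def annual_cost(watts, price_per_kwh):
    """Yearly electricity cost for a device drawing `watts` continuously."""
    kwh_per_year = watts * HOURS_PER_YEAR / 1000
    return kwh_per_year * price_per_kwh


# Assumed figures, for illustration only.
OLD_PC_WATTS = 80.0      # hypothetical repurposed desktop
SMALL_NAS_WATTS = 15.0   # hypothetical small commercial NAS
PRICE_PER_KWH = 0.30     # hypothetical tariff in pounds per kWh

old_pc = annual_cost(OLD_PC_WATTS, PRICE_PER_KWH)
small_nas = annual_cost(SMALL_NAS_WATTS, PRICE_PER_KWH)
print(f"Old PC:     £{old_pc:.2f} per year")
print(f"Small NAS:  £{small_nas:.2f} per year")
print(f"Difference: £{old_pc - small_nas:.2f} per year")
```

Comparing the yearly difference with the purchase price of a new device gives a rough idea of how long it would take for the more efficient hardware to pay for itself.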

And if you do want to create your own low-power NAS, building one around the Raspberry Pi could be worth considering, although you would be sacrificing the reliability features Manek is focussing on here.

There will always be a home for your old hardware with charities like the Turing Trust.

Building your own NAS

As you might have gathered, of course I decided to build my own NAS—in fact, not one but two, one to act as a backup to the other. And you could follow my example and do the same thing.

The Xeon E3 1230 is a server class processor launched in 2011 (the year I first built this system). At the time, this single component cost around £200. Today’s pre-loved price is around £35.


ECC (Error-Correcting Code) RAM is important in this context because computer memory can be subject to bit rot in the same way as disk drives (see data scrubbing below).

This computer is also designed to be quiet. I’ll confess that this machine is over-powered for what it’s being asked to do but things were different when I bought it.

I also run a backup server, and I’m sure you don’t need to ask why. This one started as a desktop and lives in a large tower case. Like the main server, it has room for eight hard disks. It’s based around an Asus B85M-G motherboard from about five years ago. Its 2.7GHz Intel Celeron G1820 is fairly low-powered, but a NAS doesn’t usually need a lot of CPU power. The motherboard will accept up to 32GB of RAM.

When I first built this server, I didn’t see the sense of disposing of a computer for a range of reasons, including financial and environmental ones, and buying a NAS would mean replicating most of the functionality I already owned. If instead I constructed a NAS to my own specifications, I would learn a whole lot more.

Of course, cost does come into this equation. I was prepared to fund the full 32GB of RAM as this wouldn’t restrict my eventual choice of file system. File systems can be very RAM-hungry if reliability is your main concern.

Reliability also costs disk space. We delve into this issue and the alternatives for the budget-conscious a little later.

Choosing Your Operating System

With the hardware platforms pretty much sorted, the big question was: which operating system? There’s a host of NAS software suites out there, available for free. Some of them sit on top of a pre-installed operating system (usually Linux), while others come integrated with the operating system.

But for me, the real choice was not so much between the various NAS software products as between file systems. This is, if you will excuse the expression, where the rubber meets the road.

If the file system is not as robust as it gets, given the limitations of any underlying storage hardware, then you are increasing the risk of losing data.

The data I’m storing consists of the usual mix of documents, images, videos and music. In particular, I’ve got thousands of pictures I’ve taken over the last 25 years—including close-ups of my bees (I’m a beekeeper too)—as well as those from further back that I’ve digitised. I do not want to lose any of it so, for me, performance is less important than robustness.


The Open Source Advantage

To run the program as you wish for any purpose.

To study how the program works and change it so it does your computing as you wish.

To redistribute copies so you can help your neighbour.

To distribute copies of your modified versions to others.

If it’s good enough for them, it’s good enough for me.

Closed source software on the other hand can’t be examined by users. If it needs changing, if bugs are found or if new features are wanted, you have to rely on the vendor. And if the vendor isn’t willing to make the changes you want, tough…

So, for me, it’s open source all the way—and three survivors were worthy of further exploration.

I narrowed it down to ext4, BTRFS and OpenZFS—all of which are widely available under free licences. Some of the core features that add resilience—in my opinion the most important feature for a NAS file system—are:

- journalling provides resilience to a crash or power outage. The journal is a special file that records the metadata—data about the data to be written. Only when the data has been successfully written is the update tagged as successful, so the file system retains its consistency.

- copy-on-write (CoW) means that during a write operation, blocks containing active data are not overwritten. Instead, the file system allocates a new block and writes the modified data to that. So if the system crashes or something else happens during the write operation, the old data is preserved. It is an alternative approach to journalling when writing data to disk.

- data scrubbing is a process that checks that every bit written to the storage medium is the same as when it was first laid down. This guards against what’s called bit rot: data errors which can occur over time due to glitches in the magnetic medium, cosmic rays or other disruptions.

- snapshotting: a snapshot is the state of a system at a particular point in time. It allows you to restore the file system to the point in time when the snapshot was taken—analogous to a photograph. As well as being an economical way of adding resilience, it can also enable data replication and backing up.
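
The data scrubbing idea can be sketched in a few lines of Python. This is a toy model, not how any real file system is implemented: it keeps a SHA-256 checksum beside each block when it is written, and a later scrub re-reads every block and reports any whose contents no longer match. The names `BlockStore`, `write` and `scrub` are invented for the illustration.

```python
import hashlib


class BlockStore:
    """Toy block store that records a checksum for every block written."""

    def __init__(self):
        self.blocks = {}     # block id -> bytearray holding the data
        self.checksums = {}  # block id -> checksum taken at write time

    def write(self, block_id, data):
        self.blocks[block_id] = bytearray(data)
        self.checksums[block_id] = hashlib.sha256(data).hexdigest()

    def scrub(self):
        """Re-read every block; return ids whose checksum no longer matches."""
        damaged = []
        for block_id, data in self.blocks.items():
            current = hashlib.sha256(bytes(data)).hexdigest()
            if current != self.checksums[block_id]:
                damaged.append(block_id)
        return damaged


store = BlockStore()
store.write(0, b"holiday photo")
store.write(1, b"bee close-up")

# Simulate bit rot: flip a single bit in block 1 behind the store's back.
store.blocks[1][0] ^= 0b00000001

print(store.scrub())  # prints [1]
```

This sketch only detects the damage. Repairing it needs a second, intact copy of the block to restore from, which is where redundancy across several disks comes in.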

Journalling and CoW are two different approaches to the same issue: keeping the file system consistent when a write is interrupted. A journal is a separate log kept alongside the data it describes, whereas with CoW the protection is integral to the way the file system writes every block.
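
To make the copy-on-write idea concrete, here is a minimal Python sketch. It is a toy model with invented names (`CowFile`, `snapshot`), not a description of how BTRFS or OpenZFS are actually implemented. A file is a table of pointers to blocks; a write stores the new data in a fresh block and only then moves the pointer, so the old block survives, and a snapshot is simply a saved copy of the pointer table.

```python
class CowFile:
    """Toy copy-on-write file: a table mapping logical offsets to block ids."""

    def __init__(self):
        self.storage = {}    # block id -> bytes; blocks are never overwritten
        self.table = {}      # logical offset -> block id (the live view)
        self.next_block = 0

    def write(self, offset, data):
        # Allocate a fresh block instead of overwriting the old one.
        block_id = self.next_block
        self.next_block += 1
        self.storage[block_id] = data
        # Only once the data is stored does the pointer move to the new block.
        self.table[offset] = block_id

    def read(self, offset, table=None):
        table = self.table if table is None else table
        return self.storage[table[offset]]

    def snapshot(self):
        # A snapshot copies the small pointer table, not the data blocks.
        return dict(self.table)


f = CowFile()
f.write(0, b"version 1")
snap = f.snapshot()
f.write(0, b"version 2")

print(f.read(0))        # prints b'version 2' (the live view)
print(f.read(0, snap))  # prints b'version 1' (the view as of the snapshot)
```

Because old blocks have to be kept for as long as a snapshot still points at them, this approach trades extra disk space for safety.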

Some file systems include some of these features, one or two include all of them. We’ve shortlisted three file systems that should be considered for a NAS, chosen for their features, and for their availability and support. Let’s take a look at the resilience features they provide.

- ext4 – the default file system underpinning modern Linux distributions. It features journalling and journal checksums, which can improve performance slightly; a journal keeps track of changes not yet written to disk by recording the intentions of such changes, and helps reduce the likelihood of data corruption in the event of a crash. There are few disadvantages to ext4. It is very widely used and supported, so any problems can usually be quickly sorted out. On the downside, it does not include some of the resilience features that the other two of our shortlisted file systems do.

- BTRFS – includes CoW, data scrubbing and snapshots, as well as checksums on all data. This file system was created to add more ZFS-style features to Linux (compression, snapshots and copy-on-write, for example). The main disadvantage of BTRFS seems to be down to a human rather than a technical issue. It’s not as widely used as its two main rivals, ext4 and ZFS: only a few distributions of Linux include BTRFS by default. Consequently, when technical issues crop up there’s not as much depth of knowledge available. And with ZFS now available on Linux, many might argue that there’s not much of a case to be made for BTRFS.

- OpenZFS is designed from the ground up around resilience, with features including data scrubbing, CoW, and snapshots. Its design also differs fundamentally from the other file systems in that it is monolithic: there’s no separate disk format underlying a RAID or other disk aggregation system, so there’s no separate software syntax to learn. On the downside, ZFS works best when operating with lots of RAM – around 1GB per TB of disk data is said to be optimum, although this is usually cited with enterprise needs and enterprise-sized budgets in mind. It also slows down once disk free space falls below 20% of the total. This is a consequence of the additional disk space consumed by CoW.
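
The two OpenZFS rules of thumb quoted above (roughly 1GB of RAM per TB of data, and keeping at least 20% of the disk space free) can be turned into a quick calculation. This Python sketch uses only those two figures; the 11TB data size is an assumed example, and the RAM guideline is the enterprise-oriented one, so treat the result as an upper bound for home use.

```python
RAM_GB_PER_TB = 1.0        # rule of thumb quoted above (enterprise-oriented)
MIN_FREE_FRACTION = 0.20   # below this much free space, ZFS is said to slow down


def suggested_ram_gb(data_tb):
    """RAM suggested by the 1GB-per-TB rule of thumb."""
    return data_tb * RAM_GB_PER_TB


def min_pool_size_tb(data_tb):
    """Smallest usable pool that still leaves MIN_FREE_FRACTION free."""
    return data_tb / (1.0 - MIN_FREE_FRACTION)


data_tb = 11.0  # assumed example: the amount of data you expect to store
print(f"Suggested RAM:      {suggested_ram_gb(data_tb):.0f}GB")
print(f"Usable pool needed: {min_pool_size_tb(data_tb):.2f}TB")
```

Note that the pool figure is usable capacity: any space given over to redundancy comes on top of it.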

What’s the best file system?

As ever when discussing what’s best, the answer is usually ‘it depends’. However, we’re not weaselling out like that: we’re going to pick one.

BTRFS has been said to offer improvements in scalability, reliability, and ease of management, adding many features such as copy-on-write, pooling and snapshots to increase robustness. However, as the relatively new kid on the block, it has not achieved widespread support on many Linux versions and that would make me pause. If there’s one thing you want in a file system it’s people to turn to if something breaks.

Ext4 is of course fully supported and works fine but does not include by default the resilience features that are available in the other two on our shortlist.

OpenZFS, on the other hand, has it all, with many arguing that it’s among the best if not the best file system available. Not only is it free, the OpenZFS project ensures that it stays up to date, and new enhancements are planned. OpenZFS, along with its commercial sibling ZFS (more about this later), is also used in large enterprises, where data loss can cost millions.

FreeNAS and OpenZFS

So while those file systems all have advantages and disadvantages, I chose OpenZFS for my NAS build because of its proven robustness.

For me, this narrowed down the choice of NAS software to FreeNAS, which integrates the NAS software, the operating system and the file system and made the choice easy; it sits atop FreeBSD, a version of Unix derived from BSD Unix, developed at the University of California.


FreeNAS also has a lot of support, both from an active community and from iXsystems, its developer, which also offers a commercial version for enterprise and small businesses called TrueNAS. iXsystems sells TrueNAS in conjunction with its own hardware at prices starting at around $5,500.

FreeNAS is a free download and will cost you nothing. And while its nominal hardware demands are expensive, these can be safely reduced for a home/SMB server.

Of course, I could have elected to run OpenZFS on Linux, which is a fairly new development. But the integration with popular distributions such as Ubuntu is recent—and for this application, I preferred to take a tried and tested route. Bleeding edge technology can be fun, unless your entire digital life depends on it…

Installing FreeNAS was no more difficult than installing any other operating system, and from there it was a simple matter of switching on the required services: SMB (provided by Samba) for sharing with the Windows machines, NFS for sharing with other Linux machines, rsync for client backup, and AFP, which at the time was needed for Apple shares. I then added shared directories for the various computers scattered around the house.

We now have an enterprise-grade operating and file system, all available for free, running on low-cost hardware. But I’ve missed out a crucial element of the equation: storage. Let’s backtrack.

The “S” in NAS

At the time of building the FreeNAS servers, nine years ago, my media collection occupied around 10TB, with backups from other devices adding around a terabyte.

So I needed to connect the drives to the motherboard and, as is common, neither computer’s motherboard provided enough ports for the number of drives I needed to use.

The “Logic” part of the LSI Logic Controller actually makes it too clever for its own good—in the context of ZFS. That’s a bridge we’ll be crossing in part 2.

So I chose the LSI Logic 9211-8i disk controller, which through its SAS2008 chip offers enterprise-grade reliability and performance. It also provides eight SAS ports, which with the right cables can easily be connected to standard SATA-interfaced disk drives. This controller can be found second-hand for a reasonable price on a well-known auction website. I bought two of them, one for each server.

And that’s the systems as they are today. But I’m running out of disk capacity, so finding and installing the right solutions to this problem is what the rest of this story is all about.

In a nutshell

To conclude, the FreeNAS and OpenZFS combination has proved its worth and validated the original selection decision. Not only has the software been faultless, identifying very early on two drives that showed signs of failure and giving plenty of time to source and install replacements, it’s easy to use, offers all the functionality you’ll need, and allows you to install other functions on the PC as required. And FreeNAS continues to be actively developed.


Manek Dubash: 17-Jul-20

Part 2 is here
